Table Of Contents

1. Introduction

Autonomous agent systems represent a paradigm shift in enterprise technology—intelligent systems that perceive environments, make decisions, and execute actions with minimal human oversight. While these capabilities unlock unprecedented operational efficiency and innovation potential, they simultaneously introduce complex security, privacy, and resilience challenges that traditional cybersecurity frameworks weren't designed to address.

The stakes are substantial. Autonomous agents operate with elevated privileges, access sensitive data across organizational boundaries, and make consequential decisions that impact customers, employees, and business operations. A compromised agent can exfiltrate intellectual property, manipulate critical workflows, generate fraudulent transactions, or make decisions that expose organizations to regulatory penalties and reputational damage.

Unlike conventional software vulnerabilities where attack surfaces are relatively well-defined, autonomous agents introduce dynamic risk profiles. These systems learn from data, adapt to new contexts, interact with external APIs, and generate unpredictable outputs—each capability creating potential security exposures. The interconnected nature of agent systems means vulnerabilities can cascade, with a single compromised agent potentially affecting entire agent ecosystems.

This comprehensive guide examines the security, privacy, and resilience requirements for enterprise autonomous agent deployments. As organizations navigate the complexities outlined in Generative AI and Autonomous Agents in the Enterprise: Opportunities, Risks, and Best Practices, establishing robust security foundations becomes not merely best practice but existential necessity for sustainable AI adoption.

2. Understanding Core Security Risks in Autonomous Agent Systems

Autonomous agents introduce threat vectors that extend beyond traditional application security concerns. Understanding these risks provides the foundation for comprehensive security strategies.

2.1 Data Exposure and Confidentiality Threats:

Agents require access to diverse data sources to function effectively—customer records, financial information, operational data, and proprietary algorithms. This broad data access creates significant exposure risks. Agents might inadvertently include sensitive information in outputs, leak data through verbose logging, or become conduits for unauthorized data exfiltration if compromised.

The challenge intensifies with multi-agent systems where information flows between specialized agents. Data that's appropriately accessible to one agent may be highly sensitive if exposed to others. Without granular access controls and data lineage tracking, organizations struggle to maintain confidentiality as complexity scales.

2.2 Prompt Injection and Model Manipulation:

Prompt injection attacks exploit the conversational interfaces of generative AI agents, embedding malicious instructions within seemingly benign inputs. Attackers can manipulate agents into ignoring security constraints, revealing system prompts, accessing unauthorized data, or executing unintended actions. These attacks prove particularly dangerous because they weaponize the agent's core functionality—its ability to interpret and act on natural language instructions.

Model manipulation extends beyond prompt injection to include data poisoning, where adversaries introduce corrupted training data that degrades model performance or creates backdoors. Adversarial examples—carefully crafted inputs designed to fool models—can cause agents to misclassify data or make incorrect decisions with potentially severe consequences.

2.3 System Integration Vulnerabilities:

Autonomous agents don't operate in isolation—they integrate with enterprise systems, external APIs, databases, and other agents. Each integration point represents a potential vulnerability. Agents that execute code, modify databases, or trigger workflows can become vectors for broader system compromise if not properly sandboxed and monitored.

API security becomes critical as agents increasingly orchestrate complex workflows by chaining multiple service calls. Insufficient authentication, authorization bypasses, or excessive API permissions enable compromised agents to cause damage far beyond their intended scope.

2.4 Authentication and Authorization Challenges:

Determining appropriate privilege levels for autonomous agents creates complex tradeoffs. Too restrictive, and agents cannot perform their intended functions. Too permissive, and organizations create unnecessary risk exposure. Traditional role-based access control (RBAC) often proves insufficient for dynamic agent behaviors that require context-dependent permissions.

The challenge compounds with agent impersonation risks. If agents act on behalf of users, clear attribution mechanisms must distinguish agent actions from human actions for accountability, audit trails, and security forensics.

3. Privacy Protection and Ethical Data Governance

Privacy considerations for autonomous agents extend beyond regulatory compliance to encompass ethical data stewardship and user trust preservation.

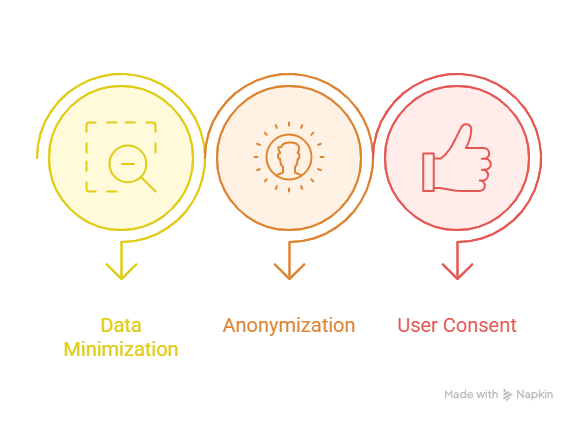

3.1 Data Minimization and Purpose Limitation:

Implementing privacy-by-design principles requires agents to access only data necessary for specific tasks. Organizations should establish granular data access policies that enforce purpose limitation—ensuring agents cannot access data beyond what's required for their designated functions. This approach reduces exposure risk while supporting compliance with privacy regulations.

Architecting systems with Data Fabric and Knowledge Graphs: The Circulatory System of the AI Enterprise enables sophisticated data governance that tracks provenance, enforces access controls, and maintains visibility into how agents consume and process information across distributed environments.

3.2 Anonymization and Differential Privacy:

When agents must process sensitive personal data, organizations should implement privacy-enhancing technologies. Differential privacy techniques add calibrated noise to datasets, enabling agents to learn useful patterns while protecting individual privacy. Anonymization and pseudonymization reduce re-identification risks when agents handle personal information.

Homomorphic encryption represents an emerging frontier—enabling computation on encrypted data without decryption. While computationally intensive, this technology allows agents to process sensitive information without ever accessing plaintext data, dramatically reducing exposure risk.

3.3 User Consent and Transparency:

Organizations must clearly communicate when autonomous agents process personal data, what purposes justify processing, and how individuals can exercise privacy rights. Transparency requirements extend to explaining agent decision-making processes, particularly for decisions that significantly impact individuals.

Privacy-preserving agent architectures should include mechanisms for users to review, correct, or request deletion of their data from agent systems. Implementing these capabilities requires careful system design that maintains data lineage across complex agent workflows.

3.4 Cross-Border Data Governance:

Autonomous agents often operate across jurisdictional boundaries, creating data sovereignty challenges. Organizations must implement technical controls that enforce geographic data restrictions, ensuring agents don't inadvertently transfer personal data across borders in violation of regulations like GDPR or emerging data localization requirements.

4. Building Resilient Agent Architectures

Resilience ensures autonomous agent systems continue functioning correctly despite attacks, failures, or unexpected conditions. Comprehensive resilience strategies address multiple failure modes simultaneously.

4.1 Layered Security Defense:

Defense-in-depth architectures implement multiple independent security controls, ensuring that single vulnerabilities don't compromise entire systems. For autonomous agents, layered security includes:

- Input validation and sanitization to prevent prompt injection and malicious data introduction

- Output filtering to prevent sensitive data leakage and ensure generated content meets safety standards

- Execution sandboxing that isolates agent operations from critical systems, limiting blast radius of compromises

- Network segmentation that restricts agent communication paths to only necessary services

- Least privilege access controls ensuring agents receive minimum permissions required for functionality

4.2 Continuous Monitoring and Anomaly Detection:

Real-time monitoring systems track agent behavior, identifying deviations from expected patterns that might indicate compromise or malfunction. Effective monitoring captures multiple signal types—API call patterns, data access behaviors, output characteristics, resource consumption, and decision outcomes.

Machine learning-based anomaly detection systems can identify subtle behavioral changes that rule-based monitoring might miss. However, these systems require careful tuning to balance sensitivity against false positive rates that create alert fatigue.

Security information and event management (SIEM) integration ensures agent security telemetry flows into enterprise security operations centers, enabling coordinated threat detection and response across hybrid human-agent environments.

4.3 Sandbox Testing and Red Teaming:

Before production deployment, organizations should rigorously test agent security through sandbox environments that simulate production conditions without risk exposure. Sandbox testing should include adversarial exercises where security teams attempt to compromise, manipulate, or abuse agent systems.

Red teaming exercises—structured attack simulations—help organizations discover vulnerabilities before adversaries do. These exercises should specifically target agent-unique attack vectors like prompt injection, tool manipulation, and multi-agent coordination exploits.

4.4 Rollback Capabilities and Circuit Breakers:

Resilient architectures include mechanisms to quickly disable or roll back agent deployments when security issues emerge. Circuit breaker patterns automatically halt agent operations when error rates, anomalous behaviors, or security indicators exceed acceptable thresholds.

Version control and deployment pipelines should support rapid rollback to previous agent configurations, enabling organizations to quickly revert problematic updates while investigating issues.

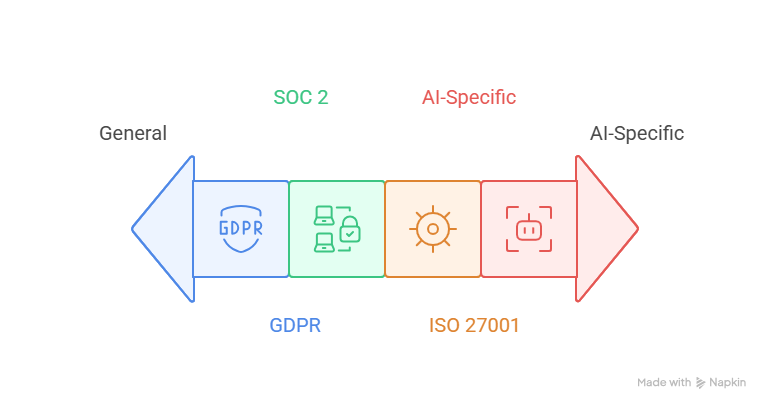

5. Compliance Frameworks and AI Security Governance

Regulatory compliance and governance frameworks provide structure for autonomous agent security programs, ensuring consistent security postures that meet legal and industry requirements.

5.1 GDPR and Privacy Regulations:

The General Data Protection Regulation establishes stringent requirements for personal data processing that directly impact autonomous agent deployments. Organizations must ensure agents implement privacy principles including lawfulness, fairness, transparency, purpose limitation, data minimization, accuracy, storage limitation, integrity, and confidentiality.

GDPR's accountability principle requires organizations to demonstrate compliance through documentation, data protection impact assessments (DPIAs), and technical measures. Autonomous agents processing personal data of EU residents must undergo DPIAs that identify privacy risks and document mitigation strategies.

The right to explanation creates particular challenges for opaque AI systems. Organizations should implement explainability mechanisms that enable transparent communication about how agents make decisions affecting individuals, particularly for high-stakes scenarios like employment, credit, or healthcare decisions.

5.2 SOC 2 and Security Controls:

Service Organization Control (SOC) 2 compliance demonstrates that organizations implement appropriate security, availability, processing integrity, confidentiality, and privacy controls. For enterprises offering autonomous agent services to customers, SOC 2 compliance provides independent verification of security postures.

SOC 2 requirements translate to specific agent security controls—access management, change management, logical and physical security, system operations, and risk mitigation. Organizations should map agent security architectures to SOC 2 trust service criteria, identifying and addressing gaps.

5.3 ISO 27001 and Information Security Management:

ISO 27001 provides comprehensive information security management system (ISMS) frameworks applicable to autonomous agent deployments. The standard's risk-based approach aligns well with agent security challenges, requiring organizations to identify information security risks, implement appropriate controls, and continuously monitor effectiveness.

Implementing ISO 27001 for agent systems involves extending existing ISMS frameworks to address agent-specific risks—model security, training data protection, prompt injection prevention, and multi-agent coordination security. Organizations should update risk assessments, security policies, and control implementations to explicitly address autonomous agent technologies.

5.4 AI-Specific Security Standards:

Emerging standards specifically address AI system security and trustworthiness. NIST's AI Risk Management Framework provides guidance for managing AI risks throughout system lifecycles. ISO/IEC standards for AI trustworthiness and robustness offer additional frameworks for autonomous agent governance.

Organizations should monitor evolving AI-specific regulations, including the EU AI Act's risk-based classification system that imposes varying requirements based on AI system risk levels. High-risk autonomous agents may face mandatory conformity assessments, transparency requirements, and human oversight obligations.

6. Implementing AI Security Governance

Effective governance translates security frameworks into operational practices that ensure consistent security postures across autonomous agent portfolios.

6.1 Security-by-Design Principles:

Security should be integrated throughout agent development lifecycles rather than added as an afterthought. Security-by-design requires threat modeling during architecture phases, secure coding practices during development, security testing before deployment, and continuous security monitoring post-deployment.

Organizations implementing AI Integration Best Practices for Business should embed security checkpoints at each integration stage, ensuring agents meet security standards before connecting to production systems and data.

6.2 Agent Risk Classification:

Not all autonomous agents carry equal risk. Organizations should implement risk classification systems that categorize agents based on data sensitivity, privilege levels, decision impact, and operational criticality. High-risk agents warrant more stringent security controls, testing requirements, and monitoring than lower-risk counterparts.

Risk classifications should inform deployment decisions, resource allocation, and governance intensity. Critical agents might require additional security reviews, penetration testing, and ongoing audit while lower-risk agents follow streamlined processes.

6.3 Incident Response Planning:

Organizations need specialized incident response procedures for autonomous agent compromises. Traditional incident response playbooks require adaptation to address agent-specific scenarios—prompt injection attacks, model poisoning, agent impersonation, or multi-agent cascading failures.

Incident response plans should clearly define detection mechanisms, containment procedures, investigation protocols, remediation steps, and communication strategies for agent security incidents. Regular tabletop exercises help teams practice coordinated responses to agent security events.

6.4 Third-Party Agent Security:

Many organizations leverage third-party agent platforms and services. Vendor security assessments become critical—organizations must evaluate vendor security postures, data handling practices, incident response capabilities, and compliance certifications. Contractual agreements should specify security requirements, data protection obligations, and breach notification procedures.

Supply chain security extends to agent dependencies—foundation models, libraries, APIs, and datasets. Organizations should maintain inventories of agent dependencies, monitor for vulnerabilities, and implement processes for rapid patching when security issues emerge.

7. Actionable Best Practices for Secure Agent Deployment

Translating security principles into practice requires concrete implementation strategies that balance security with operational effectiveness.

Implement Zero Trust Architecture:

Apply zero trust principles to agent systems—never trust, always verify. Authenticate and authorize every agent action regardless of network location or previous interactions. Implement micro-segmentation that isolates agents from unnecessary system access. Continuously verify agent identity and behavior rather than assuming trusted status.

Establish Clear Human Oversight:

Maintain human-in-the-loop controls for high-impact agent decisions. Implement approval workflows for actions exceeding risk thresholds. Design systems where humans can interrupt, override, or reverse agent actions when necessary. Balance automation efficiency against accountability and risk management needs.

Conduct Regular Security Assessments:

Schedule periodic security assessments that include vulnerability scanning, penetration testing, and compliance audits specifically targeting agent systems. Assessments should evolve alongside agent capabilities, addressing new attack vectors as agents gain additional functionalities.

Invest in Security Training:

Ensure development teams, security personnel, and business stakeholders understand agent-specific security risks and best practices. Training should cover secure agent development, security testing techniques, threat detection, and incident response procedures tailored to autonomous agent environments.

Maintain Comprehensive Audit Trails:

Implement detailed logging that captures agent decisions, data access, API calls, and actions taken. Audit trails enable security forensics, compliance demonstration, and accountability. Ensure logs are tamper-resistant and retained according to regulatory and business requirements.

Adopt Secure Development Practices:

Apply secure software development lifecycle (SDLC) practices to agent development—code reviews, static analysis, dependency scanning, and security testing. Version control all agent components including prompts, configurations, and model weights. Implement deployment pipelines with automated security checks.

8. Conclusion

Security, privacy, and resilience represent foundational requirements for sustainable autonomous agent adoption rather than optional enhancements. As these systems assume increasingly critical enterprise roles, the consequences of security failures escalate proportionally. Organizations that prioritize comprehensive security strategies—layered defenses, privacy-by-design, continuous monitoring, and robust governance—position themselves to capture agent benefits while managing inherent risks.

The security landscape for autonomous agents continues evolving as both capabilities and threats advance. Emerging attack techniques, new regulatory requirements, and increasing system complexity demand adaptive security postures that evolve alongside agent technologies. Organizations should view agent security as ongoing journeys rather than destination states, investing in continuous improvement, threat intelligence, and security innovation.

Success requires collaboration across disciplines—security professionals, AI engineers, privacy officers, legal counsel, and business leaders must work together to balance innovation velocity with risk management. By embedding security throughout agent lifecycles, maintaining transparency with stakeholders, and committing to ethical data practices, enterprises can confidently deploy autonomous agents that enhance capabilities without compromising security, privacy, or organizational resilience.

9. Frequently Asked Questions

What are the most critical security risks unique to autonomous AI agents?

The most critical agent-specific risks include prompt injection attacks that manipulate agent behavior through crafted inputs, data poisoning that corrupts training data to create backdoors or degrade performance, excessive privilege exploitation where compromised agents access unauthorized systems, and cascading failures in multi-agent environments where one compromised agent affects entire ecosystems. Unlike traditional software, agents' natural language interfaces and adaptive behaviors create dynamic attack surfaces that require specialized security controls beyond conventional cybersecurity measures.

How do we balance agent autonomy with security and human oversight requirements?

Balance autonomy and security through risk-based approaches that calibrate oversight intensity to potential impact. Implement tiered authorization where low-risk routine tasks proceed autonomously while high-impact decisions require human approval. Use confidence thresholds that trigger human review when agents encounter ambiguous scenarios. Design transparent systems that explain agent reasoning, enabling effective oversight without micromanagement. Maintain circuit breakers that allow rapid human intervention when agents exhibit unexpected behaviors or security indicators emerge.

What compliance frameworks apply to autonomous agent deployments?

Multiple frameworks apply depending on industry, geography, and use case. GDPR governs agents processing personal data of EU residents, requiring data protection impact assessments and privacy-by-design implementations. SOC 2 establishes security controls for service providers deploying agents on behalf of customers. ISO 27001 provides comprehensive information security management frameworks. Industry-specific regulations like HIPAA for healthcare, PCI DSS for payment processing, and emerging AI-specific regulations like the EU AI Act impose additional requirements. Organizations should conduct compliance assessments covering all applicable frameworks and implement comprehensive governance addressing overlapping requirements.

How can we protect against prompt injection and other AI-specific attacks?

Protect against prompt injection through multiple defensive layers—input validation that sanitizes user inputs before agent processing, prompt engineering that uses delimiters separating system instructions from user content, output filtering that prevents sensitive information leakage, and constitutional AI approaches that embed security constraints into agent training. Implement privilege separation where agents cannot execute high-risk actions without additional authorization. Regular red teaming exercises help identify injection vulnerabilities before adversaries exploit them. Monitor agent outputs for anomalous patterns indicating potential manipulation.

What role does explainability play in agent security and compliance?

Explainability serves multiple security and compliance functions. Transparent decision-making enables security teams to identify when agents behave unexpectedly, facilitating threat detection. Audit trails documenting agent reasoning support compliance demonstration and forensic investigation after security incidents. Explainability mechanisms help satisfy regulatory requirements like GDPR's right to explanation and emerging AI transparency mandates. Additionally, understanding agent reasoning enables more effective security testing—teams can verify that agents make decisions for intended reasons rather than exploiting spurious correlations or vulnerabilities.

How frequently should we conduct security assessments for autonomous agent systems?

Conduct comprehensive security assessments quarterly at minimum, with more frequent assessments for high-risk agents or rapidly evolving deployments. Continuous automated monitoring should run constantly, detecting anomalies and security indicators in real-time. Perform targeted assessments after significant agent updates, capability expansions, or when new attack techniques emerge. Major architectural changes warrant immediate security reviews before deployment. Participate in industry threat intelligence sharing to stay current on agent-specific vulnerabilities and adjust assessment frequency based on threat landscape evolution. Balance thoroughness against operational agility—overly burdensome assessment processes slow innovation while insufficient assessment creates unacceptable risks.