1. Introduction

The enterprise adoption of generative AI-driven agents has moved beyond experimental pilots into production-scale deployments. Organizations are investing heavily in autonomous systems that can reason, plan, and execute complex workflows with minimal human intervention. However, as these investments grow, a critical question emerges: How do we effectively measure success and quantify return on investment?

Unlike traditional software implementations, where ROI calculations follow established patterns, generative AI agents introduce unique measurement challenges. These systems learn, adapt, and operate with varying degrees of autonomy, which makes conventional performance metrics insufficient. Enterprises need comprehensive frameworks that capture both tangible financial returns and intangible value creation across productivity gains, operational efficiency, innovation velocity, and strategic capabilities.

This guide explores the methodologies, metrics, and best practices for measuring success in generative AI agent deployments. We'll examine evaluation frameworks that help organizations move beyond anecdotal success stories toward data-driven ROI quantification. Whether you're in the early stages of exploring generative AI and autonomous agents in the enterprise or scaling existing deployments, understanding how to measure performance is fundamental to sustainable value realization.

2. Establishing the ROI Framework for AI Agents

Before measuring returns, organizations must establish a structured framework that accounts for both direct and indirect value creation. Traditional ROI calculations focus primarily on cost reduction, but generative AI agents deliver value across multiple dimensions that require nuanced measurement approaches.

The Multi-Dimensional Value Framework includes:

- Cost Efficiency Metrics: Direct cost savings from automation, reduced error rates, and decreased manual intervention requirements

- Productivity Enhancement: Time saved per task, throughput improvements, and capacity augmentation for existing teams

- Quality Improvements: Accuracy rates, consistency metrics, and reduction in human error

- Innovation Velocity: Time-to-market acceleration, experimentation capacity, and creative solution generation

- Strategic Capabilities: New service offerings enabled, competitive advantages gained, and market position improvements

The foundation of effective ROI measurement is a set of clearly defined baseline metrics established before agent deployment. These baselines should capture current-state performance across all relevant dimensions, creating reference points against which improvements can be quantified. Without rigorous baseline measurement, organizations risk attributing natural business variation to AI agent performance.
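
The following is a minimal Python sketch of comparing current readings against recorded baselines. The `MetricReading` type, the metric names, and the numbers are hypothetical. The main idea is to normalize direction, so that a drop in handling time and a rise in throughput both register as positive improvements.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MetricReading:
    name: str
    baseline: float              # measured before the agent was deployed
    current: float               # measured in the current review period
    higher_is_better: bool = True

def improvement_pct(reading: MetricReading) -> float:
    """Percent improvement over baseline; positive always means "better"."""
    if reading.baseline == 0:
        raise ValueError(f"{reading.name}: zero baseline, percent change undefined")
    change = (reading.current - reading.baseline) / reading.baseline * 100
    return change if reading.higher_is_better else -change

# Hypothetical readings for illustration only.
readings = [
    MetricReading("tickets_closed_per_week", baseline=400, current=520),
    MetricReading("avg_handling_minutes", baseline=12.0, current=8.4, higher_is_better=False),
]
for r in readings:
    print(f"{r.name}: {improvement_pct(r):+.1f}%")
```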

3. Key Performance Indicators for Autonomous Agents

Measuring generative AI agent success requires a balanced scorecard approach that evaluates technical performance, business impact, and user experience simultaneously. No single metric tells the complete story; comprehensive evaluation demands multi-faceted KPI tracking.

3.1 Technical Performance Metrics:

Task completion rates measure the percentage of assigned tasks that agents successfully complete without human intervention. Industry benchmarks suggest well-implemented agents should achieve 85-95% autonomous completion rates for structured tasks, with lower rates expected for complex, ambiguous workflows.
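
This sketch assumes each task outcome is logged with a status label. The labels below are hypothetical, not a standard schema. Under that assumption, the autonomous completion rate is the share of tasks that never needed a human:

```python
from collections import Counter

# Hypothetical outcome labels; adapt to whatever your agent platform logs.
AUTONOMOUS = "completed_autonomously"
ESCALATED = "escalated_to_human"
FAILED = "failed"

def autonomous_completion_rate(outcomes: list[str]) -> float:
    """Share of assigned tasks completed with no human intervention."""
    if not outcomes:
        raise ValueError("no tasks recorded")
    return Counter(outcomes)[AUTONOMOUS] / len(outcomes)

outcomes = [AUTONOMOUS] * 88 + [ESCALATED] * 9 + [FAILED] * 3
print(f"{autonomous_completion_rate(outcomes):.0%}")  # 88%
```

Because expected rates differ between structured tasks and complex, ambiguous workflows, report this rate separately for each task type rather than as one blended figure.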

Response accuracy and quality scores evaluate the correctness and usefulness of agent outputs. These metrics often require human evaluation frameworks or automated quality assessment systems that compare agent outputs against gold-standard responses. Organizations that follow established AI integration best practices typically set quality thresholds before scaling deployments.
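
One simple form of automated quality assessment is normalized exact-match accuracy against gold-standard answers, checked against a threshold agreed before scaling. This sketch assumes short, factual outputs. Free-form responses usually need rubric-based human review or a model-based grader instead. The threshold value is a hypothetical placeholder.

```python
import re

def normalize(text: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace before comparing."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())

def exact_match_accuracy(predictions: list[str], gold: list[str]) -> float:
    """Fraction of agent outputs that match the gold answer after normalization."""
    if len(predictions) != len(gold) or not gold:
        raise ValueError("need equal-length, non-empty prediction and gold lists")
    hits = sum(normalize(p) == normalize(g) for p, g in zip(predictions, gold))
    return hits / len(gold)

QUALITY_THRESHOLD = 0.90  # hypothetical go/no-go bar agreed before scaling

preds = ["Refund issued.", "Order ships Monday", "Password reset link sent"]
gold = ["refund issued", "Order ships on Monday", "password reset link sent!"]
acc = exact_match_accuracy(preds, gold)
print(f"accuracy={acc:.2f}, ready_to_scale={acc >= QUALITY_THRESHOLD}")
```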

Latency and throughput measurements track how quickly agents process requests and how many concurrent tasks they can handle. These metrics directly impact user experience and determine scalability limits for production deployments.
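
Latency is usually reported as percentiles rather than averages, because a small number of slow requests can dominate the user experience. The following is a stdlib-only sketch. It assumes you have per-request latencies for a fixed observation window; the function name and sample data are illustrative.

```python
import statistics

def latency_summary(latencies_ms: list[float], window_seconds: float) -> dict[str, float]:
    """Median and 95th-percentile latency, plus throughput, for one observation window."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    # n=100 yields the 99 cut points for percentiles 1..99; index k is percentile k+1.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "throughput_per_s": len(latencies_ms) / window_seconds,
    }

# Hypothetical: 100 requests completed in a 10-second window.
samples = [float(ms) for ms in range(1, 101)]
print(latency_summary(samples, window_seconds=10.0))
```

This measures completed requests per second under observed load. Finding the concurrency limit itself still requires load testing.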

3.2 Business Impact Metrics:

Cost per transaction is one of the most tangible ROI indicators, comparing the expense of agent-completed tasks with that of human-completed equivalents. Leading organizations report cost reductions of 40% to 70% for routine cognitive tasks after accounting for infrastructure, maintenance, and oversight costs.
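
A cost-per-transaction comparison only holds if every agent-side cost is included. The sketch below uses hypothetical cost categories and figures. It sums infrastructure, maintenance, and oversight, divides by transaction volume, and compares the result with a human cost per transaction measured during the baseline period.

```python
def agent_cost_per_transaction(
    transactions: int,
    infrastructure: float,   # model/API usage and hosting for the period
    maintenance: float,      # engineering time on prompts, tools, and fixes
    oversight_hours: float,  # human review and escalation handling
    oversight_rate: float,   # fully loaded hourly cost of reviewers
) -> float:
    """Total agent-side cost for a period divided by transactions handled."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    total = infrastructure + maintenance + oversight_hours * oversight_rate
    return total / transactions

def cost_reduction_pct(agent_cost: float, human_cost: float) -> float:
    """Percentage saved per transaction relative to the human baseline."""
    return (human_cost - agent_cost) / human_cost * 100

# Hypothetical month: 10,000 transactions; human baseline of $4.00 each.
agent = agent_cost_per_transaction(
    transactions=10_000, infrastructure=6_000, maintenance=4_000,
    oversight_hours=100, oversight_rate=50.0,
)
print(f"agent ${agent:.2f}/txn, reduction {cost_reduction_pct(agent, human_cost=4.00):.1f}%")
# agent $1.50/txn, reduction 62.5%
```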

Time-to-resolution improvements measure how much faster agents complete tasks compared to traditional methods. Customer service agents, for example, often reduce average handling time by 30-50% while maintaining or improving satisfaction scores.

Capacity multiplier effects quantify how agents enable existing teams to accomplish more. Rather than simple replacement economics, many successful implementations augment human capabilities, allowing teams to handle 2-3x their previous workload without proportional headcount increases.
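
To express the capacity multiplier as a single number, compare output per person before and after deployment rather than total output. This way, any change in headcount is accounted for. The function and team figures below are hypothetical.

```python
def capacity_multiplier(
    baseline_output: float, baseline_headcount: int,
    current_output: float, current_headcount: int,
) -> float:
    """Ratio of per-person output after deployment to per-person output before."""
    before = baseline_output / baseline_headcount
    after = current_output / current_headcount
    return after / before

# Hypothetical: 10 people closed 2,000 cases/month; with agents, 11 people close 5,500.
print(f"{capacity_multiplier(2_000, 10, 5_500, 11):.1f}x")  # 2.5x
```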

3.3 User Experience Metrics:

Human satisfaction scores from both end-users and employees collaborating with agents provide critical insight into practical effectiveness. High technical performance means little if users find systems frustrating or unreliable. Regular surveys, feedback sessions, and usage analytics help organizations understand actual value delivery.

Adoption rates and engagement levels indicate whether users trust and actively leverage AI agents. Low adoption despite high capability suggests training gaps, trust deficits, or workflow integration issues that undermine ROI realization.

4. Quantifying Cost Savings and Productivity Gains

The most direct ROI component comes from quantifiable cost savings and productivity improvements. Rigorous measurement requires detailed tracking across multiple cost categories.

4.1 Direct Labor Cost Reduction:

Multiply the number of hours saved by autonomous agent execution by the fully loaded cost of human labor for equivalent tasks. That cost should include not just base wages but also benefits, overhead allocation, and opportunity costs. For example, if an agent handles 1,000 customer inquiries monthly that would otherwise require 250 human hours at a fully loaded cost of $50 per hour, the monthly savings total $12,500 (250 × $50).
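
The same arithmetic appears below as a small Python sketch, using the figures from the example. The function name is illustrative. The result is a gross figure; subtract the agent's own operating costs to get net savings.

```python
def labor_savings(hours_saved: float, fully_loaded_hourly_cost: float) -> float:
    """Gross labor savings: hours the agent absorbs x fully loaded hourly cost."""
    return hours_saved * fully_loaded_hourly_cost

# Figures from the example in the text.
inquiries_per_month = 1_000
human_hours_required = 250   # i.e. 15 minutes per inquiry
fully_loaded_rate = 50.0     # wages, benefits, overhead, and opportunity cost, $/hour

savings = labor_savings(human_hours_required, fully_loaded_rate)
print(f"${savings:,.0f} per month")                         # $12,500 per month
print(f"${savings / inquiries_per_month:.2f} per inquiry")  # $12.50 per inquiry
```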

4.2 Error Reduction Economics:

Mistakes carry costs—rework, customer dissatisfaction, compliance penalties, and reputation damage. Agents that reduce error rates deliver measurable value. Organizations should track error frequency before and after agent deployment, then calculate the average cost per error to quantify savings.
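
As a sketch of that calculation, errors avoided equal volume times the drop in error rate, and those avoided errors are multiplied by the average cost per error. The function name and all figures are hypothetical. Reputation damage is hard to price per error and is usually tracked separately.

```python
def error_reduction_savings(
    volume: int,
    error_rate_before: float,
    error_rate_after: float,
    avg_cost_per_error: float,
) -> float:
    """Avoided error cost for one period: errors avoided x average cost per error.

    avg_cost_per_error should bundle rework, customer remediation, and any
    expected compliance penalties attributable to a single error.
    """
    errors_avoided = volume * (error_rate_before - error_rate_after)
    return errors_avoided * avg_cost_per_error

# Hypothetical: 20,000 invoices/month, error rate falls from 4% to 1.5%, $120 per error.
print(f"${error_reduction_savings(20_000, 0.04, 0.015, 120.0):,.0f}")  # $60,000
```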

4.3 Operational Efficiency Improvements:

Beyond direct task automation, agents often improve overall process efficiency by eliminating bottlenecks, reducing wait times, and enabling 24/7 operation. These benefits require careful measurement of end-to-end process metrics rather than isolated task performance.

Building measurement into the enterprise's AI-native architecture ensures that data flows from agent systems into analytics platforms, enabling real-time ROI tracking and continuous optimization.

5. Measuring Innovation Velocity and Strategic Value

While cost savings provide tangible ROI evidence, generative AI agents often deliver their greatest value through accelerated innovation and expanded strategic capabilities. These benefits require different measurement approaches.

5.1 Innovation Metrics:

Time-to-market reduction measures how much faster organizations can develop, test, and launch new products or features with agent assistance. Development teams using code-generation agents report 20-40% faster feature delivery, which can translate into meaningful competitive advantage in fast-moving markets.

Experimentation capacity tracks how many more hypotheses, prototypes, or variations teams can explore when agents handle routine development, analysis, or testing tasks. Increased experimentation often correlates with breakthrough innovations that justify AI investments many times over.

5.2 Strategic Capability Metrics:

New service offerings enabled by agent capabilities represent pure value creation. Organizations should catalog and value new revenue streams or customer offerings that wouldn't exist without autonomous agent capabilities.

Competitive positioning improvements, while harder to quantify, merit serious consideration. Market share gains, customer acquisition cost reductions, and brand perception improvements attributable to agent-enabled capabilities contribute meaningfully to overall ROI.

6. Evaluation Frameworks and Continuous Improvement

Sustainable ROI requires ongoing measurement, analysis, and optimization rather than one-time calculations. Leading organizations implement continuous evaluation frameworks that adapt as agent capabilities and business contexts evolve.

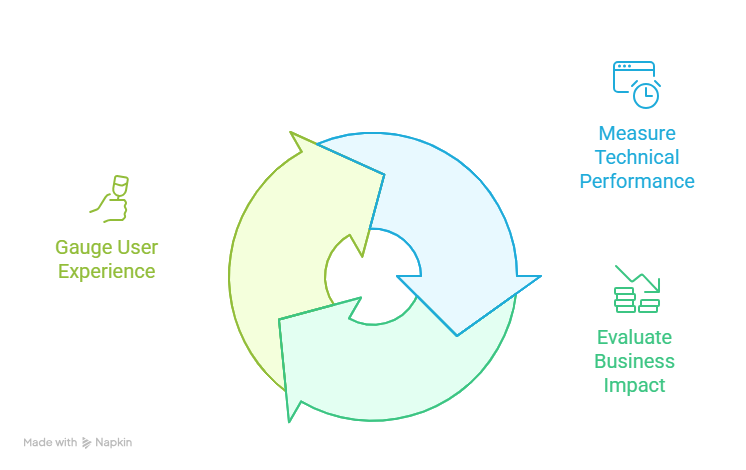

6.1 The Continuous Evaluation Cycle:

Baseline establishment creates initial reference points before agent deployment, measuring current performance across all relevant metrics. These baselines must be comprehensive and well-documented to support accurate ROI calculations.

Regular performance reviews should occur monthly or quarterly, comparing current metrics against baselines and previous periods. These reviews identify performance trends, emerging issues, and optimization opportunities.

Benchmark comparisons against industry standards and peer organizations provide external context for internal performance. Organizations should participate in industry forums and research initiatives to access comparative data.

6.2 A/B Testing and Controlled Experiments:

For critical workflows, implement controlled experiments where agent and human performance can be directly compared under similar conditions. These experiments provide the highest quality data for ROI validation and identify specific scenarios where agents excel or struggle.
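
For an A/B comparison of agent and human handling times, a permutation test is one assumption-light way to check whether an observed difference could plausibly be due to chance. This is a generic statistical sketch, not a prescribed method. The sample values are invented for illustration. In a real experiment, cases should be randomly assigned to the agent arm or the human arm.

```python
import random
import statistics

def permutation_test(
    a: list[float], b: list[float], n_resamples: int = 10_000, seed: int = 0
) -> tuple[float, float]:
    """Two-sided permutation test for a difference in means.

    Returns (mean(a) - mean(b), approximate p-value).
    """
    rng = random.Random(seed)
    observed = statistics.fmean(a) - statistics.fmean(b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = statistics.fmean(pooled[:len(a)]) - statistics.fmean(pooled[len(a):])
        if abs(diff) >= abs(observed):
            extreme += 1
    # +1 in numerator and denominator keeps the estimate from being exactly zero
    return observed, (extreme + 1) / (n_resamples + 1)

# Hypothetical handling times in minutes.
agent_minutes = [6.1, 5.8, 7.0, 6.4, 5.5, 6.9, 6.2, 5.9]
human_minutes = [9.2, 8.7, 10.1, 9.5, 8.9, 9.8, 10.4, 9.0]
diff, p = permutation_test(agent_minutes, human_minutes)
print(f"mean difference {diff:+.2f} min, p ≈ {p:.4f}")
```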

6.3 Human-Agent Collaboration Efficiency:

Perhaps the most important and challenging metric involves measuring the effectiveness of human-agent collaboration. The best implementations don't simply replace humans but create productive partnerships where each contributes their strengths. Track metrics like handoff efficiency, escalation rates, and collaborative output quality to optimize these partnerships.
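
The following sketch computes escalation rate, resolution after escalation, and mean handoff delay from interaction records. The `Interaction` fields are a hypothetical logging schema, and the data is invented. Ratios that are undefined because nothing was escalated are returned as NaN rather than zero.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Interaction:
    escalated: bool               # agent handed the case to a human
    resolved: bool                # case was ultimately resolved
    handoff_seconds: float = 0.0  # wait between escalation and human pickup

def collaboration_metrics(interactions: list[Interaction]) -> dict[str, float]:
    """Escalation rate, resolution after escalation, and mean handoff delay."""
    if not interactions:
        raise ValueError("no interactions recorded")
    escalated = [i for i in interactions if i.escalated]
    n_esc = len(escalated)
    return {
        "escalation_rate": n_esc / len(interactions),
        # NaN when nothing was escalated, since these ratios are undefined
        "resolved_after_escalation": (
            sum(i.resolved for i in escalated) / n_esc if n_esc else math.nan
        ),
        "mean_handoff_seconds": (
            sum(i.handoff_seconds for i in escalated) / n_esc if n_esc else math.nan
        ),
    }

log = (
    [Interaction(escalated=False, resolved=True)] * 8
    + [Interaction(True, True, 120.0), Interaction(True, False, 300.0)]
)
print(collaboration_metrics(log))
```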

7. Addressing Common Measurement Challenges

Organizations encounter several consistent challenges when measuring AI agent ROI. Anticipating and addressing these obstacles improves measurement accuracy.

7.1 Attribution Complexity:

When agents work alongside humans and other systems, isolating their specific contribution becomes difficult. Implement tracking mechanisms that clearly identify agent versus human contributions, and use statistical methods to estimate collaborative value creation.
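
One common statistical approach to attribution is difference-in-differences. It compares the change in a team using the agent with the change in a comparable team that is not, so that shared trends such as seasonality or product changes are netted out. The method assumes both groups would have trended in parallel without the agent. The figures below are hypothetical.

```python
def diff_in_diff(
    treated_before: float, treated_after: float,
    control_before: float, control_after: float,
) -> float:
    """Effect attributable to the agent under the parallel-trends assumption."""
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change

# Hypothetical weekly cases resolved per person.
naive = 58 - 40
attributed = diff_in_diff(treated_before=40, treated_after=58, control_before=41, control_after=47)
print(f"naive before/after: {naive}, attributed to agent: {attributed}")
# naive before/after: 18, attributed to agent: 12
```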

7.2 Long-Term Value Realization:

Some benefits, particularly around innovation and strategic positioning, manifest gradually over months or years. Establish both short-term and long-term measurement frameworks, acknowledging that initial ROI calculations may understate eventual value.

7.3 Indirect and Intangible Benefits:

Employee satisfaction improvements, knowledge retention, and organizational learning represent real value that resists easy quantification. Use qualitative assessments and proxy metrics to capture these benefits without forcing artificial precision.

8. Conclusion

Measuring success and ROI for generative AI-driven agents demands comprehensive frameworks that extend beyond traditional software ROI calculations. Organizations must balance quantifiable metrics like cost savings and productivity gains with harder-to-measure benefits around innovation velocity, strategic capabilities, and competitive positioning.

The most successful implementations establish clear baselines before deployment, track multi-dimensional KPIs continuously, and adapt measurement approaches as agent capabilities and business contexts evolve. By combining rigorous financial analysis with qualitative assessments of strategic value, enterprises can confidently justify AI agent investments and optimize deployments for maximum impact.

As generative AI agents become increasingly sophisticated and deeply integrated into enterprise operations, measurement methodologies will continue advancing. Organizations that invest in robust evaluation frameworks today position themselves to maximize value from tomorrow's even more capable autonomous systems. The key isn't achieving perfect measurement precision but establishing consistent, comprehensive approaches that support data-driven decision-making and continuous improvement.

9. Frequently Asked Questions

What is a good ROI threshold for generative AI agent investments?

While ROI expectations vary by industry and use case, successful enterprise AI agent deployments typically target 200-400% ROI within 18-24 months. This accounts for initial implementation costs, ongoing maintenance, and oversight requirements. Early-stage deployments may show lower returns as organizations climb the learning curve, while mature implementations often exceed these benchmarks significantly.
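
For reference, a ROI percentage is net gain divided by total cost. The sketch below applies this formula to a hypothetical 24-month cost breakdown that includes implementation, infrastructure, maintenance, and oversight. All categories and amounts are illustrative.

```python
def roi_pct(total_benefits: float, total_costs: float) -> float:
    """ROI as a percentage: (benefits - costs) / costs x 100."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_benefits - total_costs) / total_costs * 100

# Hypothetical 24-month totals.
costs = {
    "implementation": 150_000,
    "infrastructure": 120_000,
    "maintenance": 80_000,
    "human_oversight": 50_000,
}
benefits = 1_500_000
print(f"ROI: {roi_pct(benefits, sum(costs.values())):.0f}%")  # ROI: 275%
```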

How long does it take to realize positive ROI from AI agent deployments?

Most organizations begin seeing positive ROI within 6-12 months for focused use cases with clear automation potential. However, time-to-value varies considerably based on implementation complexity, organizational readiness, and use case selection. Quick wins from simple automation might deliver returns in weeks, while complex multi-agent systems may require 12-18 months for full value realization.
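
The payback point can be estimated by accumulating monthly net benefits (benefits minus ongoing costs) until they cover the upfront investment. The ramp below is hypothetical. It reflects benefits that grow as adoption and agent quality improve.

```python
from itertools import accumulate
from typing import Optional

def payback_month(monthly_net_benefits: list[float], upfront_cost: float) -> Optional[int]:
    """First month (1-based) in which cumulative net benefit covers the upfront cost.

    Returns None if the investment has not paid back within the data provided.
    """
    for month, cumulative in enumerate(accumulate(monthly_net_benefits), start=1):
        if cumulative >= upfront_cost:
            return month
    return None

ramp = [5_000, 10_000, 20_000, 30_000, 35_000, 40_000, 40_000, 40_000, 40_000]
print(payback_month(ramp, upfront_cost=200_000))  # 8
```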

What metrics should we prioritize when first implementing AI agents?

Start with task completion rates, time savings per transaction, and user satisfaction scores. These foundational metrics provide immediate feedback on whether agents are delivering practical value. As implementations mature, expand measurement to include quality metrics, error rates, innovation impacts, and strategic capabilities. The key is establishing baseline measurements before deployment for accurate comparison.

How do we measure the ROI of AI agents that augment rather than replace human workers?

Focus on capacity multiplier effects and throughput improvements rather than headcount reduction. Measure how much more work teams accomplish with agent assistance, improvements in output quality, and ability to take on higher-value activities. Calculate the value of additional projects completed or revenue generated that wouldn't have been possible without agent augmentation.

Should we use the same ROI frameworks for different types of AI agents?

While core principles remain consistent, customize measurement frameworks for specific agent types and use cases. Customer service agents require different metrics than code generation agents or research agents. Develop use-case-specific KPIs while maintaining common financial and strategic metrics that enable cross-implementation comparison and portfolio-level ROI assessment.

How do we account for the cost of human oversight in ROI calculations?

Include all oversight costs in your total cost of ownership calculations: time spent reviewing agent outputs, handling escalations, providing feedback, and managing systems. Many organizations find that oversight requirements decrease over time as agents improve and teams build trust, so factor learning curves into long-term ROI projections. Well-designed systems typically need oversight time equal to 10-20% of the time that completing the same tasks manually would demand.