1. Introduction

The enterprise technology landscape is undergoing a fundamental transformation. Generative AI and autonomous agents are no longer experimental technologies confined to research labs; they are becoming integral components of enterprise workflows, decision-making processes, and operational architectures. In a recent Gartner survey of business leaders, respondents reported an average productivity improvement of 22.6% from generative AI initiatives (Gartner). Meanwhile, McKinsey research estimates that generative AI could add between $2.6 trillion and $4.4 trillion in annual economic value across industries (McKinsey & Company).

This shift represents more than incremental improvement. Traditional AI systems, built primarily for prediction and classification, are being augmented, and in some cases replaced, by systems that can generate novel content, reason through complex scenarios, and operate with increasing degrees of autonomy. Deloitte predicts that 25% of enterprises using generative AI will deploy AI agents in 2025, growing to 50% by 2027 (Deloitte), indicating that enterprise leaders increasingly view generative AI and autonomous agents as strategic imperatives rather than tactical enhancements.

2. The Shift from Predictive AI to Generative and Agentic AI

Traditional AI systems have served enterprises well for specific, well-defined tasks. They excel at pattern recognition, classification, and prediction based on historical data. A fraud detection system analyzes transactions against known patterns. A recommendation engine suggests products based on purchase history. These systems are powerful but fundamentally reactive and narrow in scope.

Generative AI introduces a qualitatively different capability: the ability to create new content, synthesize information across domains, and engage in natural language interactions that feel genuinely conversational. Large language models can draft reports, generate code, summarize complex documents, and provide explanations tailored to specific contexts.

Autonomous agents take this further by adding goal-directed behavior, multi-step reasoning, and the ability to use tools and APIs independently. An autonomous agent doesn't just answer questions—it can break down complex tasks, determine what information or actions are needed, execute multiple steps, and adapt its approach based on intermediate results. These systems can operate with varying degrees of human oversight, from fully supervised to semi-autonomous to fully autonomous within defined parameters.
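The loop described above (plan steps, call tools, feed intermediate results forward, stop when something goes wrong) can be illustrated with a toy sketch. Everything here is hypothetical: the tools `lookup_revenue` and `percent_change`, the revenue figures, and the hard-coded plan stand in for what a real agent would obtain from a language model and enterprise APIs. On failure this sketch simply halts and reports, which is only one of several ways an agent might respond.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools: plain functions standing in for real enterprise APIs.
def lookup_revenue(quarter: str) -> float:
    revenue_by_quarter = {"Q1": 120.0, "Q2": 150.0}  # made-up figures
    return revenue_by_quarter[quarter]  # raises KeyError for unknown quarters


def percent_change(old: float, new: float) -> float:
    return round((new - old) / old * 100, 1)


TOOLS: Dict[str, Callable] = {
    "lookup_revenue": lookup_revenue,
    "percent_change": percent_change,
}

# Each step is (result name, tool name, arguments). An argument that matches
# the name of an earlier result is replaced by that result's value.
Step = Tuple[str, str, List[str]]


def run_agent(steps: List[Step], tools: Dict[str, Callable]):
    """Execute steps in order, passing intermediate results to later steps."""
    results: Dict[str, object] = {}
    log: List[Tuple[str, str]] = []
    for name, tool_name, args in steps:
        resolved = [results.get(arg, arg) for arg in args]
        try:
            results[name] = tools[tool_name](*resolved)
            log.append((name, "ok"))
        except Exception as exc:
            # Stop rather than continue on missing data, so that a human
            # (or a re-planning step) can decide what to do next.
            log.append((name, f"failed: {exc!r}"))
            break
    return results, log


plan: List[Step] = [
    ("q1", "lookup_revenue", ["Q1"]),
    ("q2", "lookup_revenue", ["Q2"]),
    ("growth", "percent_change", ["q1", "q2"]),
]

results, log = run_agent(plan, TOOLS)
print(results["growth"])  # 25.0
```

In a production agent the plan would typically be generated and revised by a model at run time; the fixed list here only shows how results flow between steps.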

The implications for enterprise operations are profound. Knowledge work that previously required extensive human coordination can now be partially or fully automated. Complex research tasks that took days can be completed in hours. Customer service operations can handle increasingly sophisticated inquiries without human intervention.

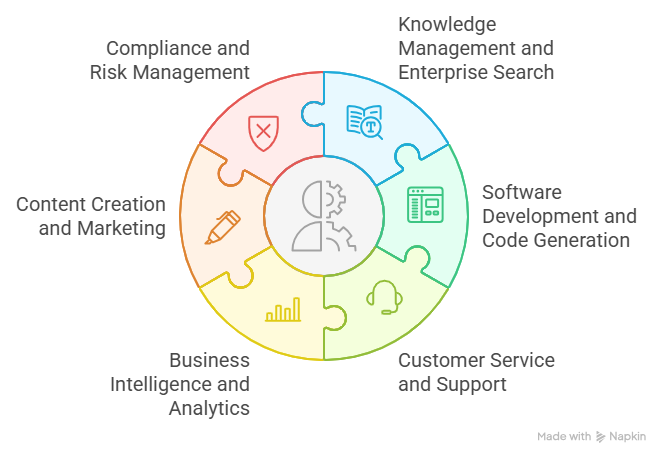

3. Key Enterprise Applications of Generative Agents

Enterprises are deploying generative AI and autonomous agents across diverse use cases, each offering distinct value propositions:

Knowledge Management and Enterprise Search: Autonomous agents can navigate vast repositories of internal documentation, extract relevant information, synthesize insights from multiple sources, and present findings in accessible formats. Unlike traditional search systems that return documents, these agents understand context and intent, delivering precise answers with supporting evidence.

Software Development and Code Generation: Generative AI systems are accelerating software development cycles by generating boilerplate code, suggesting optimizations, identifying bugs, and even architecting system components. Autonomous coding agents can understand requirements, write tests, implement features, and iterate based on feedback—all while adhering to enterprise coding standards and security protocols.

Customer Service and Support: Advanced conversational agents handle tier-one and tier-two support inquiries, resolving issues through natural dialogue, accessing backend systems, and escalating to human agents only when necessary. These systems learn from interactions, improving their responses over time while maintaining consistent service quality.

Business Intelligence and Analytics: Generative agents can query databases, perform analyses, generate visualizations, and explain findings in plain language. Decision-makers can ask complex business questions and receive actionable insights without requiring technical expertise in data analysis or visualization tools.

Content Creation and Marketing: From generating product descriptions and marketing copy to creating personalized email campaigns and social media content, generative AI enables marketing teams to scale content production while maintaining brand consistency and quality.

Compliance and Risk Management: Autonomous agents can monitor regulatory changes, assess compliance gaps, generate required documentation, and flag potential risks across operations—providing continuous oversight that would be impractical with manual processes alone.

4. Comparison: Traditional AI vs Agentic AI

Understanding the distinctions between traditional AI systems and modern generative and agentic approaches is essential for strategic planning:

This comparison reveals both the expanded capabilities and the heightened governance requirements of generative and agentic systems. While they offer unprecedented flexibility and power, they also introduce new challenges around predictability, control, and oversight.

5. Opportunities for Enterprises

The strategic value of generative AI and autonomous agents manifests across several dimensions:

5.1 Operational Efficiency and Cost Optimization

Autonomous agents can handle repetitive knowledge work at scale, freeing human workers to focus on higher-value activities requiring creativity, judgment, and interpersonal skills. Early adopters report significant reductions in time spent on routine tasks such as data entry, report generation, and initial customer inquiries.

Organizations implementing generative AI for code generation have observed development cycle reductions of 20-35%, with developers spending less time on boilerplate code and more time on architectural decisions and complex problem-solving. Similarly, customer service organizations deploying autonomous agents have achieved resolution time improvements while reducing costs per interaction.

5.2 Knowledge Automation and Institutional Memory

Enterprises accumulate vast amounts of institutional knowledge embedded in documents, emails, databases, and employee expertise. Generative agents can extract, organize, and make this knowledge accessible across the organization. New employees can quickly access relevant information without navigating complex systems or waiting for colleagues to respond. Strategic decisions can be informed by comprehensive analysis of historical data and outcomes.

This capability addresses a persistent challenge: the loss of institutional knowledge through employee turnover and the difficulty of scaling expertise across growing organizations.

5.3 Enhanced Decision Intelligence

Generative AI systems can process and synthesize information from diverse sources faster and more comprehensively than human analysts working alone. They can identify patterns across datasets, generate scenario analyses, and present decision-makers with well-reasoned recommendations supported by evidence.

Autonomous agents can continuously monitor business metrics, competitive intelligence, and market conditions, alerting decision-makers to emerging opportunities and threats. This creates a more proactive and responsive strategic posture.

5.4 Personalization at Scale

Generative AI enables personalized experiences across customer and employee interactions without the cost structure of one-to-one human engagement. Marketing messages, product recommendations, learning paths, and support interactions can be tailored to individual contexts and preferences while maintaining consistency with enterprise standards and values.

5.5 Innovation Acceleration

By automating routine aspects of research, analysis, and prototyping, generative agents allow innovation teams to iterate faster and explore more possibilities. Scientists can generate hypotheses and design experiments more rapidly. Product teams can prototype features and gather feedback in compressed timeframes. Strategic planners can model multiple scenarios and stress-test assumptions efficiently.

6. Risks and Governance Challenges

The powerful capabilities of generative AI and autonomous agents come with significant risks that must be actively managed:

6.1 Hallucination and Information Accuracy

Generative AI systems can produce confident-sounding outputs that are factually incorrect or entirely fabricated—a phenomenon known as hallucination. In enterprise contexts where decisions may have legal, financial, or safety implications, hallucinations present serious risks.

Autonomous agents acting on hallucinated information can cascade errors through multiple systems before detection. A customer service agent providing incorrect product information could trigger regulatory violations. A coding agent introducing security vulnerabilities could expose systems to attack.

6.2 Bias and Fairness

Like all AI systems, generative models reflect biases present in their training data. These biases can manifest in generated content, recommendations, and decisions. In enterprise applications affecting hiring, lending, promotion, or customer treatment, such biases may violate anti-discrimination laws and ethical standards.

Autonomous agents may amplify biases by acting on them repeatedly and at scale. Without careful monitoring, an organization could discover that its AI systems have been systematically disadvantaging certain groups.

6.3 Privacy and Data Security

Generative AI systems often require access to sensitive enterprise data to provide useful outputs. This creates risks around data leakage, unauthorized access, and compliance with privacy regulations. Employees may inadvertently share confidential information with cloud-based AI systems without proper safeguards.

Autonomous agents with broad access to enterprise systems could be exploited if compromised, potentially exposing intellectual property, customer data, or financial information.

6.4 Loss of Control and Accountability

As autonomous agents operate with increasing independence, establishing clear accountability for their actions becomes challenging. When an agent makes a decision that causes harm, determining responsibility—between the agent's developer, the enterprise deploying it, the employee who set its parameters, or the agent itself—raises complex legal and ethical questions.

The opacity of many generative AI systems compounds this challenge. Understanding why a particular output was generated or decision made may be difficult or impossible with current explainability techniques.

6.5 Regulatory and Compliance Uncertainty

The regulatory landscape for AI is evolving rapidly but remains fragmented and uncertain. The EU AI Act, proposed US federal legislation, and various state and industry-specific regulations create a complex compliance environment. Enterprises must navigate requirements around transparency, human oversight, risk assessment, and documentation while regulations continue to develop.

Autonomous agents operating across jurisdictions or in regulated industries face particularly acute compliance challenges.

6.6 Dependence and Skills Degradation

Over-reliance on AI systems may lead to organizational skills degradation. If employees routinely defer to AI outputs without developing independent judgment, the organization's capacity to function without those systems—or to critically evaluate their outputs—may erode over time.

This creates strategic vulnerability. System failures, capability limitations in novel situations, or adversarial attacks could leave organizations unable to maintain operations.

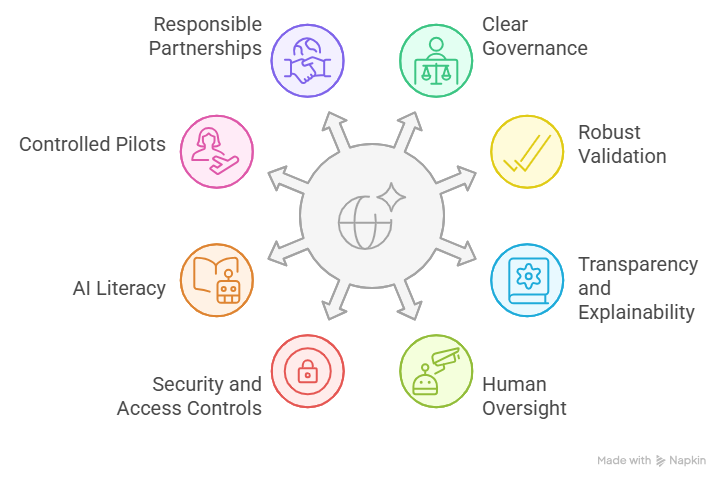

7. Best Practices for Responsible Adoption

Enterprises can capture the opportunities of generative AI and autonomous agents while managing risks through disciplined implementation practices:

7.1 Establish Clear Governance Frameworks

Effective AI governance begins with explicit policies defining appropriate use cases, risk tolerance, oversight requirements, and accountability structures. Governance frameworks should specify:

- Which decisions or tasks can be fully automated versus requiring human review

- Data access permissions and privacy protections for AI systems

- Performance standards and monitoring requirements

- Escalation procedures when systems encounter edge cases or generate uncertain outputs

- Regular audit and assessment schedules

Cross-functional governance committees including technical leaders, legal counsel, compliance officers, and business stakeholders ensure comprehensive risk assessment and appropriate guardrails.

7.2 Implement Robust Validation and Testing

Before deploying generative AI or autonomous agents in production environments, enterprises should conduct extensive validation:

- Accuracy testing: Verify outputs against ground truth across diverse scenarios

- Bias assessment: Evaluate system behavior across demographic groups and sensitive attributes

- Stress testing: Identify failure modes under unusual or adversarial inputs

- Security review: Assess vulnerabilities to prompt injection, data extraction, and other attacks

- Integration testing: Ensure proper interaction with existing enterprise systems

Validation should be continuous rather than one-time, with ongoing monitoring to detect performance degradation or emerging issues.
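As one illustration of accuracy testing against ground truth, the sketch below scores a model on a small labeled set and collects the failing cases for review. It is a minimal example under stated assumptions: `stub_model` is a hypothetical stand-in for a real model call, the test cases are invented, and normalized exact match is only one possible scoring rule (free-form outputs usually need something more forgiving).

```python
from typing import Callable, List, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not count."""
    return " ".join(text.lower().split())


def evaluate(model: Callable[[str], str], cases: List[Tuple[str, str]]):
    """Return (accuracy, failures) using normalized exact match."""
    if not cases:
        raise ValueError("at least one test case is required")
    failures = []
    for prompt, expected in cases:
        actual = model(prompt)
        if normalize(actual) != normalize(expected):
            failures.append((prompt, expected, actual))
    passed = len(cases) - len(failures)
    return passed / len(cases), failures


# Hypothetical model stub; a real harness would call the deployed system.
def stub_model(prompt: str) -> str:
    canned = {
        "Capital of France?": "Paris",
        "2 + 2?": "4",
        "Largest planet?": "Saturn",  # deliberately wrong
        "Boiling point of water in Celsius?": "100",
    }
    return canned.get(prompt, "")


GROUND_TRUTH = [
    ("Capital of France?", "paris"),
    ("2 + 2?", "4"),
    ("Largest planet?", "Jupiter"),
    ("Boiling point of water in Celsius?", "100"),
]

accuracy, failures = evaluate(stub_model, GROUND_TRUTH)
print(accuracy)   # 0.75
print(failures)
```

Running the same harness on a schedule, with the failure list tracked over time, is one way to make validation continuous rather than one-time.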

7.3 Design for Transparency and Explainability

While perfect explainability may not be achievable with current technology, enterprises can improve transparency through:

- Logging all AI-generated outputs and the inputs that produced them

- Implementing confidence scoring to indicate output reliability

- Providing reasoning traces showing how agents arrived at decisions

- Maintaining clear documentation of training data, model versions, and configuration parameters

- Creating mechanisms for users to request explanations and challenge outputs

Transparency builds trust and enables effective oversight and debugging when issues arise.
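The logging and confidence-scoring items above might be combined in a single audit record, sketched below. The field names, the `REVIEW_THRESHOLD` value, and the in-memory `sink` list are all assumptions made for illustration; the confidence score is taken as an input because how it is produced depends on the system in use.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List

REVIEW_THRESHOLD = 0.7  # hypothetical cut-off; tune per use case


def build_audit_record(prompt: str, output: str, confidence: float,
                       model_version: str) -> Dict:
    """Capture the input, the output, and enough context to audit it later."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "prompt": prompt,
        "output": output,
        "confidence": confidence,
        # Low-confidence outputs are flagged for human review.
        "needs_review": confidence < REVIEW_THRESHOLD,
    }


def append_record(sink: List[str], record: Dict) -> None:
    """Serialize one record per line (JSON Lines); sink stands in for a log store."""
    sink.append(json.dumps(record, sort_keys=True))


sink: List[str] = []
append_record(sink, build_audit_record(
    "Summarize the Q2 contract changes", "Three clauses changed.", 0.55, "demo-model-v1"))
print(json.loads(sink[0])["needs_review"])  # True
```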

7.4 Maintain Human-in-the-Loop Oversight

Critical decisions should retain meaningful human involvement. This doesn't mean humans must approve every action—which would negate efficiency gains—but rather that systems should be designed with appropriate checkpoints:

- Supervised mode: Human reviews and approves all significant actions

- Semi-autonomous mode: Agent operates independently within defined parameters; humans review exceptions and periodically audit decisions

- Autonomous mode with oversight: Agent operates independently; humans monitor dashboards and investigate anomalies

The appropriate oversight level depends on decision impact, system maturity, and risk tolerance.
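The three oversight modes can be read as a routing rule that decides whether a proposed action runs immediately or waits for a person. The sketch below is one possible encoding; the `Mode` names, the two boolean inputs, and the returned labels are hypothetical, and deciding what counts as "significant" or "within limits" is left to the governance policy.

```python
from enum import Enum


class Mode(Enum):
    SUPERVISED = "supervised"
    SEMI_AUTONOMOUS = "semi_autonomous"
    AUTONOMOUS_WITH_OVERSIGHT = "autonomous_with_oversight"


def route_action(mode: Mode, significant: bool, within_limits: bool) -> str:
    """Return what should happen to an action the agent proposes."""
    if mode is Mode.SUPERVISED:
        # A human approves every significant action before it runs.
        return "await_approval" if significant else "execute"
    if mode is Mode.SEMI_AUTONOMOUS:
        # The agent acts alone inside its parameters; exceptions go to a human.
        return "execute" if within_limits else "escalate"
    # Autonomous with oversight: act, but record the action for monitoring.
    return "execute_and_log"


print(route_action(Mode.SUPERVISED, significant=True, within_limits=True))
print(route_action(Mode.SEMI_AUTONOMOUS, significant=True, within_limits=False))
```

Keeping the rule in one small function makes the oversight level an explicit, reviewable setting rather than behavior scattered across the agent.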

7.5 Invest in Security and Access Controls

Protecting AI systems requires defense-in-depth approaches:

- Implement strict access controls limiting which users and systems can interact with AI capabilities

- Encrypt sensitive data both in transit and at rest

- Use API gateways to monitor and filter requests to AI systems

- Employ prompt filtering to detect and block malicious inputs

- Maintain separate environments for development, testing, and production

- Regularly update systems to patch vulnerabilities

For autonomous agents with broad system access, implement least-privilege principles, granting only the minimum permissions necessary for their functions.
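A least-privilege check for agents can be as simple as a deny-by-default allowlist consulted before every tool call. The sketch below assumes hypothetical agent names and permission strings such as `tickets:read`; a real deployment would more likely delegate this to an existing identity and access management system.

```python
from typing import Callable, Dict, FrozenSet

# Hypothetical allowlist: each agent gets only what its function requires.
AGENT_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "support_agent": frozenset({"tickets:read", "tickets:write", "kb:read"}),
    "reporting_agent": frozenset({"sales_db:read"}),
}


def is_allowed(agent: str, permission: str) -> bool:
    """Deny by default: unknown agents and unlisted permissions are refused."""
    return permission in AGENT_PERMISSIONS.get(agent, frozenset())


def call_tool(agent: str, permission: str, action: Callable[[], object]):
    """Run the action only if the agent holds the required permission."""
    if not is_allowed(agent, permission):
        raise PermissionError(f"{agent} lacks {permission}")
    return action()


print(call_tool("support_agent", "kb:read", lambda: "article text"))
print(is_allowed("support_agent", "sales_db:read"))  # False
```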

7.6 Build AI Literacy Across the Organization

Effective use of generative AI requires organizational understanding of both its capabilities and limitations. Training programs should help employees:

- Recognize when AI systems are appropriate versus when human judgment is essential

- Craft effective prompts and interact productively with AI systems

- Critically evaluate AI outputs rather than accepting them at face value

- Identify potential biases, errors, or inappropriate content

- Understand privacy and security implications of sharing information with AI

AI literacy transforms employees from passive consumers to informed partners in human-AI collaboration.

7.7 Start with Controlled Pilots and Scale Gradually

Rather than enterprise-wide deployments, begin with focused pilot projects in controlled environments. Select use cases with clear success metrics, manageable risk profiles, and engaged stakeholders. Learn from pilot experiences before expanding:

- Document what worked, what didn't, and why

- Refine governance processes based on real-world challenges

- Build organizational confidence and capability incrementally

- Demonstrate value to secure broader support and resources

Gradualism reduces risk while building the institutional knowledge necessary for successful large-scale implementation.

7.8 Partner with Responsible Vendors

When procuring generative AI solutions, evaluate vendors on governance and responsibility practices:

- Transparency about training data, model limitations, and potential biases

- Security certifications and compliance with relevant standards

- Commitment to ongoing monitoring, updates, and support

- Clear contractual terms around liability, data usage, and intellectual property

- Alignment with enterprise values and ethical principles

Strong vendor partnerships provide access to expertise and distribute responsibility for successful outcomes.

8. The Future of Generative AI in Autonomous Enterprise Systems

The trajectory of generative AI and autonomous agents suggests several emerging trends that will shape enterprise technology over the coming years:

Multi-Agent Collaboration: Rather than single agents operating in isolation, future systems will feature specialized agents collaborating to accomplish complex tasks. A research project might involve agents for literature review, data analysis, visualization, and writing working together under human direction.

Improved Reasoning and Planning: Current limitations in complex reasoning and long-horizon planning will diminish as models improve and agent architectures mature. Autonomous systems will handle increasingly sophisticated workflows requiring multi-step planning, resource allocation, and adaptation to changing conditions.

Enhanced Personalization and Context: Agents will maintain richer contextual understanding of individual users, organizational dynamics, and domain-specific knowledge. This will enable more nuanced, effective interactions and decision-making aligned with enterprise culture and values.

Tighter Integration with Enterprise Architecture: Generative AI capabilities will become embedded throughout enterprise systems rather than existing as standalone tools. Customer relationship management, enterprise resource planning, and business intelligence platforms will incorporate native generative and agentic features.

Regulatory Maturity and Standardization: As regulatory frameworks solidify and industry standards emerge, enterprises will have clearer guidance on compliance requirements. This will reduce uncertainty and enable more confident investment in AI capabilities.

Hybrid Intelligence Models: The most successful enterprises will excel at human-AI collaboration, designing workflows that leverage the complementary strengths of human judgment and AI capabilities. Organizational structures, incentive systems, and cultures will evolve to support this hybrid intelligence.

The enterprise that thrives in this emerging landscape will be one that approaches generative AI and autonomous agents with both ambition and discipline—eager to capture opportunities while rigorously managing risks through strong governance, continuous learning, and unwavering ethical commitment.

9. Conclusion

Generative AI and autonomous agents represent a fundamental shift in enterprise technology capabilities. The opportunities for efficiency, innovation, and enhanced decision-making are substantial and supported by growing evidence from early adopters. Organizations that successfully harness these technologies will gain significant competitive advantages in operational excellence, customer experience, and strategic agility.

However, these opportunities come with meaningful risks around accuracy, bias, privacy, control, and compliance. The enterprises that will succeed are not those that deploy AI fastest, but those that deploy it most responsibly—with robust governance frameworks, transparent operations, appropriate human oversight, and continuous attention to emerging challenges.

The question is no longer whether enterprises should adopt generative AI and autonomous agents, but how to do so in ways that create lasting value while protecting stakeholders and upholding ethical principles. This requires leadership commitment, cross-functional collaboration, investment in capabilities and culture, and the humility to learn from both successes and failures.

The future of enterprise AI is not about replacing human intelligence but augmenting it—creating organizations where humans and AI systems work together effectively, each contributing their unique strengths to achieve outcomes neither could accomplish alone. Building this future demands technical excellence, strategic vision, and moral clarity in equal measure.

10. Frequently Asked Questions

Q: What is the difference between generative AI and autonomous agents?

Generative AI refers to systems that can create new content—text, code, images, or other outputs—based on patterns learned from training data. Autonomous agents are AI systems that can independently pursue goals by planning multi-step actions, using tools, and adapting to feedback. While these concepts overlap (autonomous agents often use generative AI capabilities), not all generative AI systems are autonomous agents. A simple text generator that responds to prompts is generative AI but not an autonomous agent, while an agent that can independently research a topic, write a report, and email it to stakeholders demonstrates autonomy.

Q: How can enterprises address the hallucination problem in generative AI?

Addressing hallucinations requires multiple complementary strategies: implementing retrieval-augmented generation (RAG) to ground outputs in verified information sources; using confidence scoring to flag uncertain outputs for human review; establishing validation workflows where critical outputs are checked against authoritative sources; training employees to critically evaluate AI outputs rather than accepting them uncritically; and maintaining transparency about system limitations. No single approach eliminates hallucinations entirely, but layered defenses significantly reduce risk.
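The grounding idea behind RAG can be shown with a deliberately simplified sketch: answer only from a retrieved source, cite it, and route the question to human review when nothing relevant is found. The keyword-overlap retrieval, the `min_overlap` threshold, and the two policy documents are assumptions for illustration; production systems generally use embedding-based retrieval and a language model to compose the answer, and plain word overlap can be fooled by common words.

```python
import re
from typing import Dict, Optional, Set, Tuple


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: Dict[str, str],
             min_overlap: int = 2) -> Optional[Tuple[str, str]]:
    """Return (doc_id, text) of the best-matching document, or None."""
    question_tokens = tokenize(question)
    scored = [
        (len(question_tokens & tokenize(text)), doc_id, text)
        for doc_id, text in documents.items()
    ]
    best = max(scored, default=None)
    if best is None or best[0] < min_overlap:
        return None  # nothing relevant enough to ground an answer
    return best[1], best[2]


def grounded_answer(question: str, documents: Dict[str, str]) -> Dict:
    """Answer only from a retrieved source; otherwise flag for human review."""
    hit = retrieve(question, documents)
    if hit is None:
        return {"answer": None, "source": None, "needs_review": True}
    doc_id, text = hit
    return {"answer": text, "source": doc_id, "needs_review": False}


# Hypothetical verified sources.
DOCUMENTS = {
    "policy-12": "Refunds are issued within 14 days of a return request.",
    "policy-07": "Standard shipping takes 3 to 5 business days.",
}

print(grounded_answer("How many days do refunds take?", DOCUMENTS)["source"])
print(grounded_answer("What is the CEO's salary?", DOCUMENTS)["needs_review"])
```

Returning the source identifier alongside the answer supports the validation workflows mentioned above, since a reviewer can check the output against the cited document.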

Q: What regulatory compliance considerations are most critical for enterprise AI?

Key compliance considerations include data privacy regulations (GDPR, CCPA, sector-specific rules), anti-discrimination and fairness requirements in employment and consumer decisions, intellectual property protection, transparency and explainability mandates, industry-specific regulations (financial services, healthcare, etc.), and emerging AI-specific legislation. Enterprises should conduct regular compliance assessments, maintain documentation of AI system design and deployment decisions, implement appropriate oversight mechanisms, and stay informed about evolving regulatory requirements. Consulting with legal counsel experienced in AI governance is advisable.

Q: How should enterprises balance AI automation with preserving human jobs and skills?

Successful AI adoption reframes the question from replacement to augmentation. Rather than automating entire roles, focus on automating specific tasks to enhance human productivity and enable employees to focus on higher-value work. Invest in reskilling programs that help employees develop competencies in working alongside AI systems, managing AI outputs, and focusing on uniquely human capabilities like creativity, empathy, and complex judgment. Design workflows that maintain human involvement in meaningful ways rather than reducing humans to AI supervisors. Treat AI adoption as a change management challenge requiring communication, involvement, and attention to workforce concerns.

Q: What are the key metrics for measuring success with generative AI and autonomous agents?

Success metrics should align with specific business objectives but typically include: efficiency gains (time savings, cost reduction, throughput increases); quality improvements (accuracy rates, error reduction, customer satisfaction); scalability measures (volume of work processed, response times); adoption metrics (user engagement, integration with existing workflows); risk metrics (compliance incidents, security breaches, bias issues); and business impact (revenue effects, competitive positioning, innovation acceleration). Importantly, track not just positive outcomes but also incidents, errors, and user concerns to identify areas requiring improvement. Balanced scorecards that capture both opportunities and risks provide the most complete picture of AI value.