1. Introduction: The Circulatory System of Intelligent Enterprises

In biological systems, the circulatory system ensures that oxygen, nutrients, and vital information reach every cell in the organism. Without this continuous flow, tissues atrophy and organs fail. The modern AI enterprise operates under a similar imperative: intelligent systems require constant access to unified, contextualized data flowing seamlessly across all operational domains.

Data fabric and knowledge graphs serve as the circulatory system of the AI Operating System. Where traditional data architectures resemble disconnected reservoirs requiring manual integration, data fabric establishes intelligent pathways that automatically discover, connect, and deliver data across hybrid and multi-cloud environments. Knowledge graphs layer semantic understanding atop this infrastructure, transforming raw data streams into contextually rich information networks that AI models can interpret and act upon.

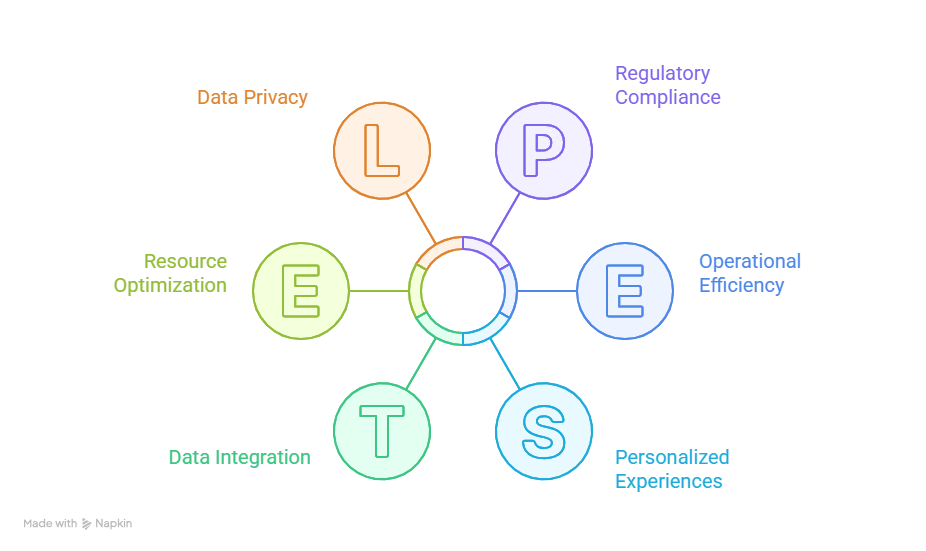

This architectural paradigm shift addresses a fundamental challenge facing enterprises: the proliferation of data silos across disparate systems, cloud platforms, and operational units has created fragmentation that undermines the promise of enterprise AI. Without a unified data circulatory system, AI initiatives remain isolated experiments rather than transformative organizational capabilities. Data fabric and knowledge graphs provide the connective tissue that transforms fragmented data landscapes into intelligent, self-aware information ecosystems capable of powering next-generation AI applications.

2. Data Fabric as the Enterprise Neural Network

Data fabric represents an architectural approach that creates an integrated layer of data and connecting processes across distributed environments. Unlike traditional data integration methods that require manual pipeline construction and maintenance, data fabric employs metadata-driven automation, active metadata management, and embedded machine learning to create self-configuring data delivery networks.

The fundamental distinction between data fabric and conventional architectures lies in the approach to integration. Traditional extract-transform-load (ETL) processes create rigid, point-to-point connections that must be manually reconfigured as systems evolve. Data fabric architectures instead analyze metadata patterns, usage behaviors, and data lineage continuously to identify suitable data paths and transformation requirements automatically. This orchestration is intended to reduce integration effort substantially while improving data availability and shortening time-to-insight.

According to Gartner's analysis, organizations implementing data fabric architectures can reduce the time for integration design by 30 percent, deployment by 30 percent, and maintenance by 70 percent. These efficiency gains translate directly into organizational agility, enabling enterprises to adapt data infrastructure at the pace of business change rather than technical constraints.
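To make these percentages concrete, the short calculation below applies them to a purely hypothetical project. The baseline hours are assumptions chosen for illustration, not figures from the analysis cited above.

```python
# Hypothetical baseline effort in hours for one integration project (assumed values).
baseline_hours = {"design": 100, "deployment": 50, "maintenance": 200}

# Percentage reductions as quoted in the text above.
reduction_pct = {"design": 30, "deployment": 30, "maintenance": 70}


def reduced_effort(baseline, reductions):
    """Apply each percentage reduction to its matching baseline figure."""
    return {
        phase: hours * (100 - reductions[phase]) / 100
        for phase, hours in baseline.items()
    }


after = reduced_effort(baseline_hours, reduction_pct)
total_before = sum(baseline_hours.values())
total_after = sum(after.values())
overall_pct = 100 * (total_before - total_after) / total_before

print(after)
print(f"Overall reduction: {overall_pct:.1f}%")
```

The blended saving depends heavily on how effort is distributed across phases: because maintenance carries the largest reduction, projects dominated by maintenance would see the largest overall effect under these assumptions.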

Data fabric implementations leverage several core capabilities that distinguish them from legacy approaches. Active metadata management continuously captures technical, operational, and semantic metadata across all data assets, creating a comprehensive understanding of data location, quality, lineage, and usage patterns. Knowledge graph technology structures this metadata into navigable relationship networks that enable intelligent discovery and automated integration. Machine learning algorithms analyze historical integration patterns and usage behaviors to recommend optimal data pipelines and proactively identify quality issues before they impact downstream applications.
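As a rough illustration of how lineage metadata might be structured into a navigable graph, the sketch below records lineage links between data assets and walks them upstream. The `MetadataGraph` class and the asset names are hypothetical and do not correspond to any particular product.

```python
from collections import defaultdict, deque


class MetadataGraph:
    """Toy metadata catalog: nodes are data assets, edges are lineage links."""

    def __init__(self):
        # Maps each asset to the set of assets it is directly derived from.
        self._upstream = defaultdict(set)

    def add_lineage(self, source, target):
        """Record that `target` is derived from `source`."""
        self._upstream[target].add(source)

    def upstream_of(self, asset):
        """Return every asset that `asset` depends on, directly or indirectly."""
        seen = set()
        queue = deque([asset])
        while queue:
            current = queue.popleft()
            for parent in self._upstream[current]:
                if parent not in seen:
                    seen.add(parent)
                    queue.append(parent)
        return seen


graph = MetadataGraph()
# Hypothetical assets spread across on-premises and cloud systems.
graph.add_lineage("crm.customers", "lake.customer_clean")
graph.add_lineage("erp.orders", "lake.orders_clean")
graph.add_lineage("lake.customer_clean", "mart.customer_360")
graph.add_lineage("lake.orders_clean", "mart.customer_360")

print(sorted(graph.upstream_of("mart.customer_360")))
```

A traversal like this is the kind of query that would let a fabric answer "which sources feed this view?" without anyone maintaining that list by hand.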

The architectural implications extend beyond technical efficiency. Data fabric enables organizations to implement true data democratization by abstracting underlying complexity and presenting consumers with simplified, semantically meaningful data access interfaces. Business analysts, data scientists, and AI applications interact with unified data views regardless of whether underlying sources reside in on-premises databases, cloud data lakes, or software-as-a-service applications. This abstraction layer maintains governance controls while eliminating technical barriers that traditionally restricted data access to specialized integration teams.

3. Knowledge Graphs as Semantic Intelligence Layers

While data fabric establishes the infrastructure for unified data access, knowledge graphs provide the semantic intelligence that transforms data into actionable context. A knowledge graph represents information as an interconnected network of entities, attributes, and relationships, creating machine-readable representations of domain knowledge that enable AI systems to reason about data rather than merely process it.

The architectural distinction between traditional relational databases and knowledge graphs reflects fundamentally different approaches to information modeling. Relational systems organize data into predefined tables optimized for transactional efficiency, requiring developers to anticipate all possible query patterns during schema design. Knowledge graphs model information as flexible networks where new entities and relationships can be added without restructuring existing data. This flexibility proves essential in AI applications where requirements evolve continuously and systems must integrate information from previously unknown sources.
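The flexibility described above can be sketched with a minimal in-memory store of (subject, predicate, object) triples. The `KnowledgeGraph` class and all identifiers are illustrative assumptions; production systems would typically use a dedicated graph database.

```python
class KnowledgeGraph:
    """Minimal in-memory graph of (subject, predicate, object) triples."""

    def __init__(self):
        self.triples = set()

    def add(self, subject, predicate, obj):
        """Store one fact; no table or column has to exist beforehand."""
        self.triples.add((subject, predicate, obj))

    def match(self, subject=None, predicate=None, obj=None):
        """Return sorted triples matching the pattern; None acts as a wildcard."""
        return sorted(
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )


kg = KnowledgeGraph()
kg.add("customer:alice", "holds", "account:1001")
kg.add("account:1001", "is_a", "SavingsAccount")

# A new relationship type arrives later; existing data is left untouched.
kg.add("customer:alice", "advised_by", "employee:bob")

print(kg.match(subject="customer:alice"))
```

Adding the `advised_by` relationship required no change to existing data, whereas a relational design would usually need a new column or join table for the same fact.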

Knowledge graphs employ semantic technologies including Resource Description Framework (RDF), Web Ontology Language (OWL), and SPARQL query language to create standards-based representations that facilitate interoperability across organizational boundaries. These standards enable knowledge graphs to integrate diverse vocabularies, taxonomies, and ontologies into unified semantic models that capture enterprise-specific terminology while maintaining compatibility with industry standards and external data sources.

IBM's enterprise knowledge graph implementations demonstrate how semantic technologies enable sophisticated AI applications across industries. Financial services organizations employ knowledge graphs to model complex regulatory frameworks, customer relationships, and transaction patterns, enabling compliance systems to automatically identify reportable activities across disparate business units. Healthcare enterprises use knowledge graphs to integrate clinical data, research findings, and treatment protocols, supporting diagnostic AI systems that reason across patient histories, genomic data, and medical literature.

The value proposition extends beyond individual applications to systemic organizational intelligence. Knowledge graphs serve as the semantic foundation for enterprise AI by providing shared conceptual models that ensure consistency across analytical initiatives. When multiple AI systems reference common knowledge graph representations of customers, products, or operational concepts, their outputs become inherently interoperable. This semantic alignment enables composite AI applications that orchestrate multiple specialized models into cohesive analytical workflows.

Knowledge graph construction requires careful attention to ontology design, entity resolution, and relationship extraction. Ontologies define the conceptual frameworks that structure graph information, establishing entity types, relationship types, and semantic rules that govern graph structure. Entity resolution identifies when different data sources reference identical real-world entities despite using different identifiers or representations. Relationship extraction employs natural language processing and machine learning to discover implicit connections within unstructured content, transforming documents and communications into structured graph relationships.
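Entity resolution can be illustrated with a deliberately simplified, rule-based sketch that groups records whose names normalize to the same key. The suffix list, record identifiers, and company names are assumptions for illustration; real pipelines generally add fuzzy matching and additional attributes such as addresses.

```python
import re
from collections import defaultdict

# Assumed list of legal suffixes to ignore when comparing company names.
LEGAL_SUFFIXES = {"inc", "corp", "corporation", "llc", "ltd"}


def normalize(name):
    """Lowercase, strip punctuation, and drop legal suffixes."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)


def resolve(records):
    """Group (source_id, name) records that normalize to the same key."""
    clusters = defaultdict(list)
    for source_id, name in records:
        clusters[normalize(name)].append(source_id)
    return dict(clusters)


# Hypothetical records from three systems describing overlapping companies.
records = [
    ("crm:17", "Acme Corp."),
    ("erp:A-204", "ACME Corporation"),
    ("billing:9", "Acme, Inc"),
    ("crm:42", "Globex LLC"),
]

print(resolve(records))
```

Each resulting cluster would become a single entity node in the graph, with the source identifiers retained as attributes so that lineage back to the original systems is preserved.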

4. Enterprise Adoption and Digital Transformation Impact

The convergence of data fabric and knowledge graph technologies has accelerated significantly as enterprises recognize their necessity for AI-driven transformation. Market analysis reveals substantial momentum behind these architectural approaches as organizations seek to overcome data fragmentation challenges that impede AI initiatives.

Deloitte's survey of enterprise data architecture trends identifies data fabric and knowledge graph adoption as critical enablers of digital transformation, with organizations implementing these technologies reporting substantial improvements in data accessibility, quality, and time-to-insight. Enterprises that successfully deployed integrated data fabric and knowledge graph architectures reduced analytical development cycles by 40 to 60 percent while improving model accuracy through enhanced data quality and contextual enrichment.

Financial services organizations have emerged as particularly aggressive adopters, driven by regulatory requirements for comprehensive data lineage and the competitive imperative to deliver personalized customer experiences. Global banks employ knowledge graphs encompassing hundreds of millions of entities representing customers, accounts, transactions, products, and regulatory concepts. These semantic networks enable real-time risk assessment, fraud detection, and regulatory reporting across previously siloed business lines and geographic regions.

Manufacturing enterprises leverage data fabric architectures to unify operational technology (OT) data from production systems with enterprise resource planning and supply chain management systems. Knowledge graphs model complex product configurations, supplier relationships, and manufacturing processes, enabling AI-driven optimization of production scheduling, quality control, and predictive maintenance. The ability to reason across design specifications, production data, and field performance creates closed-loop improvement cycles that continuously enhance operational efficiency.

Healthcare organizations face particularly acute data integration challenges due to fragmented electronic health records, diverse diagnostic systems, and siloed research databases. Knowledge graphs that model clinical concepts, patient relationships, and treatment protocols enable AI applications to synthesize information across disparate sources, supporting clinical decision support, drug discovery, and population health management. The semantic richness of graph representations proves essential for healthcare AI systems that must reason about complex medical concepts and uncertain clinical information.

The adoption trajectory suggests that data fabric and knowledge graph technologies are transitioning from emerging innovations to architectural imperatives. Organizations that delay implementation risk accumulating technical debt as data fragmentation intensifies with continued system proliferation and cloud migration. Early adopters are establishing competitive advantages through superior data accessibility, analytical agility, and AI capability that prove difficult for competitors to replicate without comparable foundational investments.

5. Integration with Real-Time Intelligence and AI-Native Architecture

Data fabric and knowledge graphs do not exist in isolation but rather form foundational layers within comprehensive AI Operating Systems. Their integration with real-time intelligence dashboards and AI-native enterprise architecture creates synergistic capabilities that transcend the sum of individual components.

Real-time intelligence dashboards depend fundamentally on data fabric capabilities to aggregate streaming information from operational systems, IoT sensors, transaction processors, and external data feeds. The automated integration and continuous metadata management that characterize data fabric architectures enable dashboards to maintain current views across dynamic data landscapes without manual pipeline reconfiguration. Knowledge graphs enhance dashboard intelligence by providing semantic context that enables dynamic drill-down analysis, automated anomaly explanation, and intelligent alerting based on relationship patterns rather than simple threshold violations.
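One way to picture alerting on relationship patterns is to raise an alert for an entity only when several of its related entities are anomalous at the same time. The sketch below assumes a hypothetical set of production lines and sensors, and a `min_related` parameter invented for this example.

```python
from collections import defaultdict


def related_alerts(edges, anomalous, min_related=2):
    """Flag entities that are linked to at least `min_related` anomalous entities."""
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)

    alerts = {}
    for entity, linked in neighbors.items():
        hits = sorted(linked & anomalous)
        if len(hits) >= min_related:
            alerts[entity] = hits
    return alerts


# Hypothetical graph linking production lines to their sensors.
edges = [
    ("line_3", "sensor_temp"),
    ("line_3", "sensor_vibration"),
    ("line_3", "sensor_pressure"),
    ("line_4", "sensor_flow"),
]
# Sensors currently reporting unusual readings (assumed input from upstream detection).
anomalous = {"sensor_temp", "sensor_vibration", "sensor_flow"}

print(related_alerts(edges, anomalous))
```

Here `line_4` is not flagged because only one of its sensors is anomalous, while `line_3` is flagged because two related sensors deviate together, which a per-sensor threshold alone would not express.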

The architectural integration extends to AI model deployment and orchestration. AI-native architectures employ data fabric infrastructure to provide models with consistent access to training data, feature stores, and operational data sources regardless of underlying storage technologies or locations. Knowledge graphs can complement feature stores by supplying models with semantically enriched input features, which can improve model performance by making explicit the relationship information that models would otherwise have to learn implicitly from training data.

Model governance and explainability benefit substantially from knowledge graph integration. When AI models consume semantically annotated features derived from knowledge graphs, their decision processes can be traced back through explicit relationship chains to source data and business concepts. This traceability proves essential for regulated industries where model decisions must be explainable to auditors and customers. Knowledge graphs effectively create audit trails that document not merely what data informed model decisions but why that data proved relevant within the semantic context of the business problem.
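The traceability described above can be sketched as a search for a labeled path from a model decision back to a source table. The `explain` function, the relationship names, and the loan example are hypothetical and serve only to illustrate the idea of an explicit relationship chain.

```python
from collections import deque


def explain(edges, start, goal):
    """Breadth-first search returning the labeled path from start to goal, or None."""
    outgoing = {}
    for subject, relation, obj in edges:
        outgoing.setdefault(subject, []).append((relation, obj))

    queue = deque([(start, [start])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, nxt in outgoing.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [relation, nxt]))
    return None


# Hypothetical provenance graph for a single credit decision.
edges = [
    ("decision:loan_declined", "used_feature", "feature:debt_to_income"),
    ("feature:debt_to_income", "derived_from", "concept:monthly_debt"),
    ("feature:debt_to_income", "derived_from", "concept:monthly_income"),
    ("concept:monthly_debt", "sourced_from", "table:core_banking.loans"),
    ("concept:monthly_income", "sourced_from", "table:payroll.deposits"),
]

path = explain(edges, "decision:loan_declined", "table:payroll.deposits")
print(" -> ".join(path))
```

The returned chain alternates entities and relationship labels, so it can be rendered directly as an audit statement of which feature, business concept, and source table contributed to the decision.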

The combination enables sophisticated use cases that would prove impractical with traditional architectures. Conversational AI assistants leverage knowledge graphs to understand user intent within organizational context, accessing relevant information through data fabric infrastructure and formulating responses that reflect enterprise-specific terminology and relationships. Recommendation systems employ graph traversal algorithms to identify non-obvious product affinities and customer segments, delivering personalized experiences based on relationship patterns rather than simple collaborative filtering.
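A graph-traversal recommendation can be sketched as counting two-hop paths from products a customer already owns to other products through shared categories or projects. The node naming convention (`product:`, `category:`, `project:`) and the sample data are assumptions made for this example.

```python
from collections import Counter, defaultdict


def recommend(edges, owned, max_results=3):
    """Rank unowned products by the number of two-hop paths from owned products."""
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)

    scores = Counter()
    for product in owned:
        for middle in neighbors[product]:
            for candidate in neighbors[middle]:
                if candidate.startswith("product:") and candidate not in owned:
                    scores[candidate] += 1

    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:max_results]]


# Hypothetical product graph linking items to categories and typical projects.
edges = [
    ("product:drill", "category:power_tools"),
    ("product:sander", "category:power_tools"),
    ("product:drill", "project:deck_build"),
    ("product:deck_screws", "project:deck_build"),
    ("product:sander", "project:deck_build"),
    ("product:paint", "project:room_refresh"),
]

print(recommend(edges, owned={"product:drill"}))
```

In this sketch `product:deck_screws` is suggested because it shares a project with the drill, an affinity that comes from the modeled relationship rather than from co-purchase history.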

Operational AI applications that must respond to rapidly changing conditions particularly benefit from this architectural integration. Supply chain optimization systems employ real-time data fabric feeds to monitor inventory levels, shipment status, and demand signals while leveraging knowledge graphs to reason about supplier capabilities, product substitutability, and logistics constraints. This combination enables dynamic replanning that adapts to disruptions while respecting complex business rules and relationships that simple optimization algorithms cannot accommodate.
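A minimal sketch of such replanning is shown below: given a disrupted supplier, it looks for alternatives that provide the same or a substitutable part within a lead-time limit. The `replan` function, supplier names, part names, and the lead-time rule are all hypothetical.

```python
def replan(part, disrupted, substitutes, suppliers, max_lead_days):
    """Return viable (lead_days, supplier, part) options, fastest first."""
    # Substitutability would come from the knowledge graph; here it is a plain dict.
    acceptable_parts = {part, *substitutes.get(part, [])}
    options = [
        (s["lead_days"], s["name"], s["part"])
        for s in suppliers
        if s["name"] not in disrupted
        and s["part"] in acceptable_parts
        and s["lead_days"] <= max_lead_days
    ]
    return sorted(options)


# Assumed substitutability relationship and current supplier feed.
substitutes = {"bearing-A": ["bearing-B"]}
suppliers = [
    {"name": "NorthCo", "part": "bearing-A", "lead_days": 5},
    {"name": "EastCo", "part": "bearing-B", "lead_days": 7},
    {"name": "WestCo", "part": "bearing-A", "lead_days": 21},
    {"name": "SouthCo", "part": "bearing-C", "lead_days": 3},
]

options = replan("bearing-A", {"NorthCo"}, substitutes, suppliers, max_lead_days=14)
print(options)
```

The supplier list stands in for the real-time feed and the substitutes mapping stands in for graph relationships; the fastest supplier overall is rejected because its part is not a modeled substitute.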

6. Navigating Implementation Challenges

Despite their transformative potential, data fabric and knowledge graph implementations confront substantial challenges that organizations must address systematically to achieve successful outcomes. These challenges span technical, organizational, and governance domains, requiring coordinated strategies that extend beyond technology deployment.

Data silos represent the most visible impediment to data fabric implementation. Legacy systems designed for departmental autonomy often lack APIs, employ proprietary data formats, or maintain inconsistent semantic models that resist integration. Overcoming these barriers requires significant investment in data extraction, transformation, and semantic alignment. Organizations must balance the comprehensive vision of unified data access against pragmatic realities of legacy system constraints and the resource requirements for comprehensive integration.

Governance frameworks must evolve to accommodate distributed data fabric architectures while maintaining necessary controls around access, quality, and compliance. Traditional governance approaches that rely on centralized data warehouses and manual approval processes prove incompatible with data fabric principles of distributed access and automated integration. Organizations require governance architectures that embed controls within fabric infrastructure, employing automated policy enforcement, continuous data quality monitoring, and audit logging that operates across distributed environments.
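Embedding controls in the fabric can be pictured as a policy check that runs on every access request and records its outcome. The `PolicyEngine` class, role names, and classifications below are illustrative assumptions rather than a reference to any specific governance tool.

```python
from dataclasses import dataclass, field


@dataclass
class PolicyEngine:
    """Checks read access by data classification and logs every decision."""

    # Maps a data classification to the roles allowed to read it (assumed policy).
    rules: dict
    audit_log: list = field(default_factory=list)

    def can_read(self, user, role, dataset, classification):
        """Return True if the role may read the classification; always audit."""
        allowed = role in self.rules.get(classification, set())
        self.audit_log.append(
            {
                "user": user,
                "dataset": dataset,
                "classification": classification,
                "allowed": allowed,
            }
        )
        return allowed


engine = PolicyEngine(
    rules={
        "public": {"analyst", "data_scientist"},
        "pii": {"compliance_officer"},
    }
)

print(engine.can_read("dana", "analyst", "sales.summary", "public"))
print(engine.can_read("dana", "analyst", "crm.customers", "pii"))
print(len(engine.audit_log))
```

Because both the allow and the deny decisions are logged, the same mechanism serves enforcement and audit, which is the property distributed governance needs when no central approval step exists.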

Knowledge graph construction presents particular challenges around ontology design and maintenance. Developing conceptual models that accurately represent complex business domains while remaining sufficiently flexible to accommodate evolution requires deep domain expertise and stakeholder collaboration. Organizations often underestimate the effort required for entity resolution and relationship extraction, particularly when integrating unstructured content that requires natural language processing and machine learning techniques.

Skill gaps impede implementation across multiple dimensions. Data fabric architectures require expertise in distributed systems, metadata management, and integration technologies that remain scarce in many organizations. Knowledge graph development demands skills in semantic technologies, ontology engineering, and graph algorithms that few data professionals possess. Organizations must invest in training existing staff, recruiting specialized talent, and establishing partnerships with technology providers and system integrators to address capability gaps.

Cultural resistance frequently undermines technical implementations when stakeholders perceive data fabric initiatives as threatening departmental autonomy or imposing unwelcome transparency. Organizations must approach data fabric deployment as organizational transformation rather than purely technical projects, investing in change management, stakeholder engagement, and demonstrating tangible value through focused use cases that build organizational confidence and momentum.

Interoperability with existing investments presents practical challenges that influence implementation approaches. Organizations must determine appropriate integration strategies for legacy data warehouses, data lakes, and analytical platforms, balancing the benefits of comprehensive fabric coverage against risks of disrupting functioning systems. Phased implementations that establish fabric capabilities for new use cases while gradually extending coverage to legacy systems often prove more successful than disruptive rip-and-replace approaches.

7. Conclusion: Foundation for Scalable AI Operations

Data fabric and knowledge graphs represent not merely incremental improvements to data management but fundamental architectural shifts that enable enterprise AI at scale. Their role as the circulatory system of the AI Operating System proves essential for organizations seeking to transcend isolated AI experiments and achieve systemic intelligent automation.

The architectural paradigm they establish—unified yet distributed data access combined with semantic intelligence—resolves tensions that have constrained enterprise data strategies for decades. Organizations need not choose between centralized control and operational autonomy, between data consistency and flexibility, or between comprehensive integration and practical implementation timelines. Data fabric and knowledge graphs provide frameworks that accommodate these tensions through intelligent automation, semantic abstraction, and gradual evolution.

The competitive implications extend beyond operational efficiency to strategic positioning. Organizations that successfully implement these foundational technologies establish advantages that compound over time as AI capabilities expand and data volumes increase. The infrastructure they create becomes progressively more valuable as knowledge graphs capture accumulated organizational intelligence and data fabric automation learns from expanding usage patterns.

As AI transitions from experimental technology to operational necessity, the quality of underlying data infrastructure increasingly determines organizational capability. Data fabric and knowledge graphs transform data from static resources requiring manual processing into active, intelligent systems that continuously adapt to organizational needs. This transformation proves essential for enterprises seeking to operate at the speed and scale demanded by AI-driven markets.

8. Frequently Asked Questions

What distinguishes data fabric from traditional data integration approaches?

Data fabric employs active metadata management and embedded machine learning to create self-configuring integration networks that automatically discover, connect, and deliver data across distributed environments. Unlike traditional ETL pipelines that require manual construction and maintenance, data fabric architectures analyze metadata patterns and usage behaviors to automatically identify suitable data paths and transformation requirements. According to the Gartner analysis cited earlier, this automation can reduce integration design time by 30 percent, deployment time by 30 percent, and maintenance effort by 70 percent compared with conventional approaches.

How do knowledge graphs enhance enterprise AI applications?

Knowledge graphs transform data into semantically rich information networks that enable AI systems to reason about relationships and context rather than merely processing isolated data points. By representing information as interconnected entities and relationships, knowledge graphs provide AI models with explicit domain knowledge that improves accuracy, enables explainability, and facilitates integration across multiple AI systems. They serve as shared semantic foundations that ensure consistency across analytical initiatives and enable composite AI applications that orchestrate specialized models into cohesive workflows.

What are the primary challenges organizations face when implementing data fabric architectures?

Implementation challenges span technical, organizational, and governance domains. Technical obstacles include integrating legacy systems that lack modern APIs or employ proprietary formats, requiring significant extraction and transformation investments. Governance frameworks must evolve to embed automated controls within distributed fabric infrastructure while maintaining compliance and quality standards. Organizations face skill gaps in distributed systems, metadata management, and semantic technologies that require training, recruitment, or partnerships. Cultural resistance emerges when stakeholders perceive fabric initiatives as threatening departmental autonomy, necessitating change management and stakeholder engagement strategies.

How do data fabric and knowledge graphs support real-time intelligence requirements?

Data fabric architectures employ automated integration and continuous metadata management to aggregate streaming information from operational systems, IoT sensors, and external feeds without manual pipeline reconfiguration. Knowledge graphs enhance real-time intelligence by providing semantic context that enables dynamic analysis, automated anomaly explanation, and intelligent alerting based on relationship patterns. This combination supports operational AI applications that must respond to rapidly changing conditions while reasoning about complex business rules and relationships that simple algorithms cannot accommodate.