
1. Introduction: The Infrastructure Imperative

Enterprise digital transformation has reached an inflection point. Organizations are no longer asking whether to adopt artificial intelligence but rather how fundamentally to restructure their technology foundations to support intelligence-first operations. The scale of this transition is visible in capital allocation patterns across the technology industry.

Global spending on data center hardware and software reached an all-time high in 2024, growing 34% year-over-year to $282 billion, driven predominantly by AI infrastructure investments. More remarkably, spending on cloud infrastructure increased 99.3% year-over-year in the fourth quarter of 2024 to $67.0 billion, with accelerated server deployments for AI workloads accounting for the majority of growth.

The four largest technology companies (Alphabet, Amazon, Meta, and Microsoft) collectively planned approximately $315 billion in capital spending in 2025, primarily directed toward AI and cloud infrastructure. This is among the largest concentrated infrastructure investments in technology history, on a scale that invites comparison with earlier buildouts for cloud computing, mobile networks, and internet backbone infrastructure.

These investments reflect a fundamental recognition: competitive advantage in the next decade will accrue to organizations that embed intelligence into their operational DNA rather than those that bolt AI capabilities onto legacy architectures. The distinction between AI-enabled and AI-native organizations will be as consequential as the divide between digital and analog enterprises proved in previous decades.

Generative AI adoption jumped from 55% in 2023 to 75% in 2024, but adoption rates mask deeper questions about implementation quality. Organizations report an average ROI of 3.7x on generative AI investments, yet top performers realize returns of 10.3x, a nearly threefold difference (10.3 / 3.7 ≈ 2.8) that correlates strongly with architectural maturity. The organizations achieving superior returns have implemented AI-native architectures that treat intelligence as infrastructure rather than as feature additions to existing systems.

This analysis examines the defining characteristics of AI-native enterprise architecture, dissects its core components, evaluates implementation models, and projects its evolution through 2030. The strategic imperative is clear: enterprises must reimagine their technology foundations to compete in an intelligence-driven economy.

2. Defining AI-Native Architecture: Beyond Augmentation to Foundation

AI-native architecture represents a categorical departure from conventional approaches to enterprise AI adoption. Understanding this distinction requires clarity about three different paradigms that organizations frequently conflate.

2.1 AI-Enabled vs AI-Augmented vs AI-Native Systems

AI-Enabled Systems represent the first-generation approach to enterprise AI adoption. These implementations add machine learning capabilities to existing applications without modifying underlying architectures. A traditional ERP system that incorporates a recommendation engine or a CRM platform that adds predictive lead scoring exemplifies this approach. The AI functionality operates as a feature layer atop conventional software, consuming data from relational databases designed for transactional processing rather than analytical workloads.

AI-enabled systems suffer from architectural impedance mismatches. Data pipelines built for batch processing struggle to support real-time inference. Monolithic application architectures create bottlenecks when model serving requires independent scaling. Security models designed for human access control fail to address model governance requirements. Organizations implementing AI-enabled systems frequently encounter these limitations as AI adoption expands beyond pilot projects.

AI-Augmented Systems represent an intermediate evolutionary stage where organizations refactor portions of their technology stack to better accommodate AI workloads while preserving core legacy architectures. This approach typically involves implementing data lakes or warehouses optimized for analytical queries, deploying separate model serving infrastructure, and creating API layers that mediate between traditional applications and ML services.

AI-augmented architectures reduce some constraints but introduce new complexities. Data must be replicated between transactional and analytical systems, creating synchronization challenges and increasing storage costs. Model serving infrastructure operates independently of application logic, requiring orchestration layers to coordinate interactions. Developers work across multiple technology stacks, increasing cognitive load and slowing development velocity.

AI-Native Systems fundamentally reconceive enterprise architecture with intelligence as a first-class design consideration from inception. These systems assume that every component—from data ingestion to user interfaces—will interact with machine learning models. Rather than retrofitting AI capabilities onto architectures designed for different purposes, AI-native systems optimize for the specific requirements of continuous learning, adaptive behavior, and automated decision-making.

The architectural implications are profound. Data platforms unify transactional and analytical processing, eliminating replication delays. Application logic incorporates model inference as a native operation rather than an external service call. Infrastructure automatically scales both compute and storage resources in response to model training and serving demands. Development workflows integrate model development, testing, and deployment as seamlessly as conventional code changes.

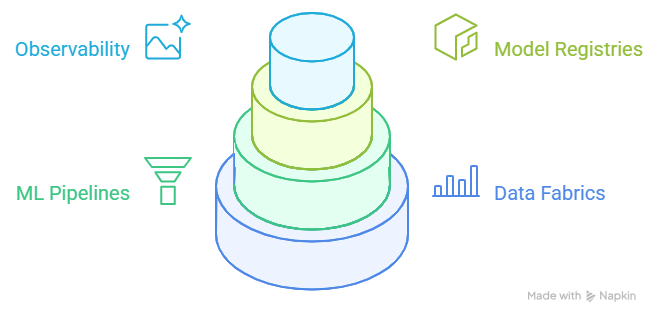

2.2 The Four Core Layers of AI-Native Architecture

AI-native architectures organize into four integrated layers, each addressing specific requirements while maintaining coherent interfaces with adjacent layers.

Data Layer: Unified Intelligence Foundation

The data layer provides the substrate upon which all intelligence operations depend. AI-native data platforms unify streaming and batch processing, operational and analytical workloads, and structured and unstructured data formats within architectures that eliminate the traditional boundaries between transactional databases, data warehouses, and data lakes.

Modern data fabrics implement this unification through distributed storage systems optimized for both low-latency transactional access and high-throughput analytical scans. Apache Iceberg, Delta Lake, and Apache Hudi provide table formats that support ACID transactions while enabling time-travel queries and schema evolution—capabilities essential for machine learning workflows that require reproducible training datasets and model versioning.
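To make the time-travel idea concrete, here is a minimal pure-Python sketch of snapshot versioning. It deliberately does not use the Iceberg, Delta Lake, or Hudi APIs; `VersionedTable`, `commit`, and `read` are hypothetical names, and copying the whole table on each commit is a simplification.

```python
import copy
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]


class VersionedTable:
    """Toy keyed table that keeps every committed snapshot (hypothetical, not a real table format)."""

    def __init__(self) -> None:
        # Version 0 is the empty table; each commit appends a new immutable snapshot.
        self._snapshots: List[Dict[str, Row]] = [{}]

    def commit(self, upserts: Dict[str, Row], deletes: Tuple[str, ...] = ()) -> int:
        """Build the next snapshot in full, publish it in one step, and return its version."""
        next_state = copy.deepcopy(self._snapshots[-1])
        next_state.update(copy.deepcopy(upserts))
        for key in deletes:
            next_state.pop(key, None)
        self._snapshots.append(next_state)
        return len(self._snapshots) - 1

    def read(self, version: Optional[int] = None) -> Dict[str, Row]:
        """Read the latest snapshot, or time-travel to an earlier version."""
        snapshot = self._snapshots[-1] if version is None else self._snapshots[version]
        return copy.deepcopy(snapshot)


table = VersionedTable()
v1 = table.commit({"c1": {"churned": False}, "c2": {"churned": True}})
v2 = table.commit({"c1": {"churned": True}}, deletes=("c2",))

# A training job that pinned v1 still sees exactly the rows it was trained on.
print(table.read(v1))  # {'c1': {'churned': False}, 'c2': {'churned': True}}
print(table.read())    # {'c1': {'churned': True}}
```

Real table formats avoid the full copy by recording each snapshot as metadata that points at immutable data files, which is what makes pinning a version cheap enough to do for every training run.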

The data layer implements comprehensive lineage tracking that maps every derived dataset back to its source systems, transformation logic, and quality validations. This lineage becomes critical for model governance, enabling data scientists to understand precisely which data informed specific predictions and auditors to verify compliance with data usage policies.
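Lineage of this kind is naturally a directed graph. The sketch below assumes a hypothetical in-memory mapping from each derived dataset to its inputs and walks it breadth-first to recover the root source systems; a production lineage store would also attach transformation logic and validation results to each edge.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage graph: each derived dataset maps to the inputs it was built from.
LINEAGE: Dict[str, List[str]] = {
    "churn_training_set": ["customer_features", "churn_labels"],
    "customer_features": ["crm.accounts", "billing.invoices"],
    "churn_labels": ["billing.invoices"],
}


def upstream_sources(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Return the root source systems that a dataset ultimately depends on."""
    roots: Set[str] = set()
    seen: Set[str] = {dataset}
    queue = deque([dataset])
    while queue:
        node = queue.popleft()
        parents = lineage.get(node, [])
        if not parents and node != dataset:
            roots.add(node)  # No recorded inputs: treat it as a source system.
        for parent in parents:
            if parent not in seen:  # Shared inputs are visited only once.
                seen.add(parent)
                queue.append(parent)
    return roots


print(sorted(upstream_sources("churn_training_set", LINEAGE)))
# ['billing.invoices', 'crm.accounts']
```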

Model Layer: Continuous Learning Infrastructure

The model layer encompasses all infrastructure supporting model development, training, validation, serving, and monitoring. AI-native architectures treat models as first-class artifacts with lifecycle management comparable to application code but with distinct requirements for versioning, testing, and deployment.

Feature stores provide centralized repositories for engineered features, enabling data scientists to discover and reuse transformations while ensuring consistency between training and serving environments. Offline stores maintain historical feature values for model training while online stores provide low-latency access during inference, abstracting the complexity of synchronizing these environments from model developers.
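The offline/online split can be sketched as follows, assuming a hypothetical `ToyFeatureStore` rather than any particular product's API. The key behaviour is the point-in-time lookup: a training example timestamped at `ts` only sees feature values recorded at or before `ts`, which keeps future information out of training data, while the online view simply holds the newest value. Because both views are fed by one write path, training and serving see the same feature logic.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Key = Tuple[str, str]  # (entity id, feature name)


class ToyFeatureStore:
    """Hypothetical store: one write path feeds an offline history and an online latest-value view."""

    def __init__(self) -> None:
        self._offline: Dict[Key, List[Tuple[int, float]]] = defaultdict(list)  # sorted (ts, value)
        self._online: Dict[Key, float] = {}

    def write(self, entity: str, feature: str, ts: int, value: float) -> None:
        history = self._offline[(entity, feature)]
        bisect.insort(history, (ts, value))
        self._online[(entity, feature)] = history[-1][1]  # Newest value serves inference.

    def get_online(self, entity: str, feature: str) -> Optional[float]:
        """Low-latency lookup used at inference time."""
        return self._online.get((entity, feature))

    def get_as_of(self, entity: str, feature: str, ts: int) -> Optional[float]:
        """Point-in-time lookup for training: the latest value recorded at or before ts."""
        history = self._offline.get((entity, feature), [])
        idx = bisect.bisect_right(history, (ts, float("inf"))) - 1
        return history[idx][1] if idx >= 0 else None


store = ToyFeatureStore()
store.write("cust_42", "orders_30d", ts=100, value=3.0)
store.write("cust_42", "orders_30d", ts=200, value=5.0)
print(store.get_as_of("cust_42", "orders_30d", ts=150))  # 3.0 (what the model could have known)
print(store.get_online("cust_42", "orders_30d"))          # 5.0
```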

Experiment tracking systems record every model training run, capturing hyperparameters, training datasets, evaluation metrics, and artifact references. This comprehensive tracking enables reproducibility, supports model comparison, and provides the audit trails required in regulated industries.

Model registries serve as centralized catalogs for production models, maintaining metadata about model lineage, performance characteristics, deployment targets, and approval status. The registry mediates model promotion through development, staging, and production environments, enforcing governance policies and enabling rollback when deployed models underperform.
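A minimal sketch of the promotion and rollback mechanics, assuming a hypothetical `ToyRegistry` class (not the MLflow registry or any vendor API). It collapses development and staging into a single approval flag to keep the example short, but it shows the governance rule that unapproved versions cannot serve production and the promotion history that makes rollback a one-step operation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    metrics: Dict[str, float]
    approved: bool = False


@dataclass
class ToyRegistry:
    """Hypothetical registry tracking which version serves production, with rollback."""
    versions: Dict[int, ModelVersion] = field(default_factory=dict)
    production_history: List[int] = field(default_factory=list)

    def register(self, metrics: Dict[str, float]) -> int:
        version = len(self.versions) + 1
        self.versions[version] = ModelVersion(version, metrics)
        return version

    def approve(self, version: int) -> None:
        self.versions[version].approved = True

    def promote(self, version: int) -> None:
        # Governance policy: only approved versions may serve production traffic.
        if not self.versions[version].approved:
            raise PermissionError(f"version {version} has not been approved")
        self.production_history.append(version)

    def rollback(self) -> int:
        """Return production to the previously promoted version."""
        if len(self.production_history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self.production_history.pop()
        return self.production_history[-1]

    @property
    def production(self) -> Optional[int]:
        return self.production_history[-1] if self.production_history else None


registry = ToyRegistry()
for auc in (0.81, 0.84):
    v = registry.register({"auc": auc})
    registry.approve(v)
    registry.promote(v)
print(registry.rollback())  # 1: version 2 underperformed in production, so it is withdrawn
```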

Orchestration Layer: Coordinating Intelligence Workflows

The orchestration layer coordinates the complex workflows that span data ingestion, feature engineering, model training, validation, deployment, and monitoring. These workflows involve heterogeneous compute resources—CPU clusters for data transformation, GPU instances for model training, specialized inference accelerators for serving—that must be provisioned, scheduled, and monitored across hybrid cloud environments.

Modern orchestration platforms such as Apache Airflow and Kubeflow Pipelines provide declarative workflow definitions that specify dependencies between tasks while delegating execution to underlying schedulers. These platforms implement retry logic, failure handling, and resource management, enabling data teams to focus on workflow logic rather than operational concerns. MLflow, often deployed alongside them, handles experiment tracking and model registration rather than workflow scheduling.
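The dependency ordering and retry behaviour these platforms provide can be approximated with Python's standard-library `graphlib` module (Python 3.9 or later). This is not the Airflow or Kubeflow API: `run_workflow` and the task names are hypothetical, and tasks run sequentially in one process rather than on a distributed scheduler.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set


def run_workflow(
    tasks: Dict[str, Callable[[], None]],
    deps: Dict[str, Set[str]],
    max_retries: int = 2,
) -> List[str]:
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    completed: List[str] = []
    for name in TopologicalSorter(deps).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:  # A real scheduler would also back off between attempts.
                if attempt == max_retries:
                    raise RuntimeError(f"task {name!r} failed after {attempt + 1} attempts") from exc
    return completed


calls = {"train": 0}


def flaky_train() -> None:
    # Simulates a transient failure, e.g. a preempted GPU node, on the first attempt only.
    calls["train"] += 1
    if calls["train"] == 1:
        raise ConnectionError("GPU node preempted")


tasks = {"ingest": lambda: None, "features": lambda: None, "train": flaky_train, "evaluate": lambda: None}
deps = {"features": {"ingest"}, "train": {"features"}, "evaluate": {"train"}}
order = run_workflow(tasks, deps)
print(order)  # ['ingest', 'features', 'train', 'evaluate']
```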

The orchestration layer implements policy enforcement that ensures workflows comply with organizational standards. Data access controls restrict which systems can read sensitive information. Compute quotas prevent individual experiments from consuming excessive resources. Approval gates require human review before deploying models to production environments serving regulated decisions.

Application Layer: Intelligence-Infused Experiences

The application layer delivers AI capabilities to end users through interfaces that range from traditional web and mobile applications to conversational agents, augmented reality experiences, and autonomous systems. AI-native applications differ from conventional software by treating model inference as a core operation that influences every user interaction.

Rather than implementing business logic through deterministic code paths, AI-native applications delegate decisions to models that learn optimal behavior from data. A financial services application determines credit approval through models that continuously refine risk assessment based on repayment patterns rather than through static rule engines. A supply chain system optimizes routing through models that learn from historical delivery performance rather than through pre-programmed algorithms.

The application layer implements responsible AI patterns that maintain human oversight while leveraging automated intelligence. Explainability interfaces surface the factors that influenced model decisions, enabling users to understand and validate recommendations. Confidence thresholds route low-certainty predictions to human review rather than automating unreliable decisions. Feedback mechanisms capture user corrections that inform model retraining.
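Confidence-threshold routing and feedback capture reduce to a few lines. In this sketch the `ReviewRouter` class and the 0.85 threshold are assumptions for illustration; in practice the threshold is tuned per decision type and is only meaningful if the model's confidence scores are calibrated.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ReviewRouter:
    """Hypothetical router: auto-apply confident predictions, queue the rest for humans."""
    threshold: float = 0.85
    review_queue: List[Tuple[str, str, float]] = field(default_factory=list)
    feedback: List[Tuple[str, str, str]] = field(default_factory=list)

    def route(self, case_id: str, label: str, confidence: float) -> str:
        if confidence >= self.threshold:
            return "automated"
        self.review_queue.append((case_id, label, confidence))
        return "human_review"

    def record_correction(self, case_id: str, predicted: str, corrected: str) -> None:
        # Corrections are kept as labelled examples for the next retraining run.
        self.feedback.append((case_id, predicted, corrected))


router = ReviewRouter(threshold=0.85)
print(router.route("loan-001", "approve", 0.93))  # automated
print(router.route("loan-002", "approve", 0.61))  # human_review
router.record_correction("loan-002", "approve", "decline")
```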

3. Architectural Components: Building Blocks of Intelligence

Implementing AI-native architecture requires integrating multiple specialized components that collectively provide the capabilities organizations need to develop, deploy, and operate intelligent systems at enterprise scale.

3.1 Data Fabrics and Unified Data Platforms

Data fabrics provide the foundational layer that makes data accessible, discoverable, and trustworthy across organizational boundaries. Unlike traditional data integration approaches that move data between systems, data fabrics create virtual access layers that enable applications and models to consume data from source systems without physical replication.

The fabric architecture implements several critical capabilities. Active metadata management continuously catalogs all data assets, tracking schemas, data quality metrics, access patterns, and lineage relationships. This metadata enables semantic search that helps users discover relevant datasets based on business terminology rather than technical system names.

Intelligent query optimization analyzes data access patterns and automatically caches frequently accessed datasets, creates materialized views for expensive aggregations, and pushes computation to systems where data resides to minimize network transfer. These optimizations occur transparently, improving performance without requiring application changes.

Policy-driven access control enforces data governance rules consistently across all consumption paths. Users and applications receive filtered views of data based on their authorization levels, geographic location, and intended use. Sensitive fields are automatically masked or tokenized based on context, enabling organizations to democratize data access while maintaining security and compliance.
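A minimal sketch of context-dependent masking, assuming a hypothetical policy table keyed on the caller's role only (location and intended use would be further inputs in a real policy engine). Tokenization uses a keyed HMAC from Python's standard library so the same value always maps to the same token, which keeps joins working on pseudonymised columns; the hard-coded key is a placeholder for a managed secret.

```python
import hashlib
import hmac
from typing import Dict, Set

# Hypothetical policy: which roles may see each sensitive field in clear text.
CLEAR_TEXT_ROLES: Dict[str, Set[str]] = {
    "email": {"support_agent"},
    "ssn": set(),  # No role sees raw SSNs through this path.
}
TOKEN_KEY = b"replace-with-a-managed-secret"  # Assumption: fetched from a key manager in practice.


def tokenize(value: str) -> str:
    """Deterministic keyed token: the same input always yields the same token."""
    return hmac.new(TOKEN_KEY, value.encode(), hashlib.sha256).hexdigest()[:16]


def apply_policy(record: Dict[str, str], role: str) -> Dict[str, str]:
    view = {}
    for name, value in record.items():
        allowed = CLEAR_TEXT_ROLES.get(name)
        if allowed is None or role in allowed:
            view[name] = value  # Not a sensitive field, or the role is authorised.
        elif name == "ssn":
            view[name] = "***-**-" + value[-4:]  # Partial mask for display.
        else:
            view[name] = tokenize(value)  # Pseudonymise for analytics.
    return view


row = {"customer_id": "c-17", "email": "ana@example.com", "ssn": "123-45-6789"}
print(apply_policy(row, "data_scientist"))
print(apply_policy(row, "support_agent"))
```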

3.2 ML Pipelines and Model Development Infrastructure

Machine learning pipelines automate the repetitive workflows involved in model development, enabling data scientists to iterate rapidly while maintaining reproducibility and quality standards. Modern ML pipeline frameworks provide abstractions that separate pipeline logic from execution environments, allowing the same pipeline definitions to run on laptops during development and distributed clusters during production training.

Pipeline components encapsulate discrete operations—data validation, feature engineering, model training, evaluation, deployment—with well-defined interfaces that specify input data schemas, configuration parameters, and output artifacts. This modularity enables teams to build libraries of reusable components while maintaining flexibility to customize pipelines for specific use cases.

Continuous training pipelines automate model retraining as new data arrives, implementing schedules that balance model freshness against computational cost. These pipelines incorporate automated quality gates that evaluate newly trained models against production baselines, deploying improved models while blocking regressions. Statistical tests detect data drift that invalidates existing models, triggering alerts that prompt investigation before prediction quality degrades.
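The two checks described above, drift detection and a quality gate, can be sketched as follows. Drift is measured here with the population stability index (PSI), one common choice among several (Kolmogorov-Smirnov tests are another); the equal-width binning, the 1e-6 floor for empty bins, and the function names are assumptions. A frequently cited rule of thumb treats PSI above roughly 0.25 as a significant shift.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population stability index of `actual` against `expected`, using equal-width bins."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # Guard against a constant baseline.

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # Clamp out-of-range values to edge bins.
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def passes_quality_gate(challenger_auc: float, production_auc: float, min_gain: float = 0.0) -> bool:
    """Deploy only if the retrained model is at least as good as production, plus a margin."""
    return challenger_auc >= production_auc + min_gain


baseline = [i / 100 for i in range(100)]      # training-time feature values, 0.00 to 0.99
shifted = [0.5 + i / 200 for i in range(100)]  # live values concentrated in the upper half
print(psi(baseline, baseline), psi(baseline, shifted) > 0.25)  # 0.0 True
```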

3.3 Model Registries and Version Control

Model registries serve as the source of truth for production machine learning models, providing centralized catalogs that track model lineage, performance metrics, deployment status, and approval workflows. The registry mediates all model deployments, enforcing organizational policies while maintaining comprehensive audit trails.

Version control for models extends beyond storing model artifacts to encompass complete reproducibility packages that include training datasets, feature definitions, preprocessing logic, model architectures, hyperparameters, and training code. This comprehensive versioning enables teams to reproduce any historical model, supporting regulatory audits and debugging production issues.
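One way to give a reproducibility package a stable identity is to hash a canonical serialisation of everything it references. The sketch assumes each entry is already an immutable reference (a pinned table snapshot, a commit hash); the field names and values are hypothetical placeholders, and the registry would still need to store the referenced artifacts themselves.

```python
import hashlib
import json
from typing import Any, Dict


def reproducibility_fingerprint(package: Dict[str, Any]) -> str:
    """Hash a canonical JSON encoding so identical packages always get the same ID."""
    canonical = json.dumps(package, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


package = {
    "training_data": "churn_table@snapshot-1042",  # hypothetical pinned snapshot
    "feature_definitions": ["orders_30d", "days_since_login"],
    "preprocessing": "standard_scaler",
    "architecture": "gradient_boosted_trees",
    "hyperparameters": {"max_depth": 6, "learning_rate": 0.1},
    "code_commit": "<git commit hash>",  # placeholder
}
version_id = reproducibility_fingerprint(package)
print(version_id[:12])
```

Sorting keys means the ID does not depend on the order in which fields were assembled, while any change to data, features, or hyperparameters yields a new ID.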

Model metadata captures not just technical characteristics but also business context—intended use cases, known limitations, fairness evaluations, privacy impact assessments, and approval documentation. This metadata informs governance decisions about where and how models can be deployed while providing operators with context needed to troubleshoot issues.

A/B testing frameworks integrated with model registries enable gradual rollout of new models, routing controlled percentages of production traffic to challenger models while monitoring comparative performance. This capability reduces deployment risk by detecting issues before full rollout while providing statistically rigorous performance comparisons.
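Two pieces make gradual rollout work: deterministic traffic assignment, so a user sees the same model on every request, and a significance test on the outcome. The sketch hashes a salted user ID into a bucket and applies a standard two-proportion z-test with pooled variance; the salt, traffic share, and conversion counts are illustrative assumptions.

```python
import hashlib
import math


def assign_variant(user_id: str, challenger_share: float, salt: str = "churn-v2-test") -> str:
    """Deterministically bucket a user so they see the same model on every request."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53  # 53 random bits, uniform in [0, 1).
    return "challenger" if bucket < challenger_share else "champion"


def two_proportion_z(successes_a: int, n_a: int, successes_b: int, n_b: int) -> float:
    """z statistic for the difference between two conversion rates (pooled variance)."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se


print(assign_variant("user-1", challenger_share=0.10))
z = two_proportion_z(500, 10_000, 580, 10_000)  # champion 5.0%, challenger 5.8%
print(round(z, 2))  # 2.5
```

With these illustrative counts, z is about 2.5, above the 1.96 cutoff for a two-sided test at the 5% level. The test assumes the sample size was fixed in advance; repeatedly checking the result as data arrives inflates the false-positive rate unless a sequential method is used.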

3.4 Observability and Monitoring Frameworks

Observability frameworks for AI systems extend beyond traditional application monitoring to address the unique characteristics of systems that learn and adapt. These frameworks track not just system health metrics but also model performance indicators, data quality measures, and business impact metrics.

Model performance monitoring compares prediction distributions against historical baselines, detecting drift that indicates degraded accuracy before business metrics suffer. For supervised learning models, monitoring systems track prediction-outcome alignment when ground truth becomes available, calculating metrics like accuracy, precision, and recall over sliding time windows. For unsupervised models and recommender systems, monitoring focuses on proxy metrics like user engagement that correlate with model effectiveness.
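For a binary classifier, sliding-window metrics can be maintained with a bounded deque. The class name and window size are assumptions; the sketch assumes labels are 0/1 and that each prediction is added once its ground truth arrives.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class SlidingWindowMetrics:
    """Accuracy, precision, and recall over the most recent `size` labelled predictions."""

    def __init__(self, size: int = 1000) -> None:
        self.window: Deque[Tuple[int, int]] = deque(maxlen=size)  # (predicted, actual)

    def add(self, predicted: int, actual: int) -> None:
        # Called when ground truth arrives; the oldest pair drops out automatically.
        self.window.append((predicted, actual))

    def metrics(self) -> Dict[str, float]:
        tp = sum(1 for p, a in self.window if p == 1 and a == 1)
        fp = sum(1 for p, a in self.window if p == 1 and a == 0)
        fn = sum(1 for p, a in self.window if p == 0 and a == 1)
        correct = sum(1 for p, a in self.window if p == a)
        n = len(self.window)
        return {
            "accuracy": correct / n if n else 0.0,
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
        }


m = SlidingWindowMetrics(size=4)
for predicted, actual in [(1, 1), (1, 0), (0, 1), (0, 0), (1, 1)]:
    m.add(predicted, actual)
print(m.metrics())  # {'accuracy': 0.5, 'precision': 0.5, 'recall': 0.5}
```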

Data quality monitoring validates that input data conforms to expected schemas, value ranges, and distributional properties. Anomaly detection algorithms identify unusual patterns that may indicate upstream data pipeline failures, sensor malfunctions, or adversarial inputs designed to manipulate model behavior.
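Schema and range checks are the simplest layer of data quality monitoring. The expectations below are hypothetical; distributional checks and anomaly detection would sit on top of this and need statistical tests rather than per-record rules.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical expectations for one input record: field -> (type, min, max).
SCHEMA: Dict[str, Tuple[type, float, float]] = {
    "age": (int, 18, 110),
    "monthly_spend": (float, 0.0, 50_000.0),
}


def validate(record: Dict[str, Any]) -> List[str]:
    """Return human-readable violations; an empty list means the record passes."""
    problems = []
    for name, (expected_type, lo, hi) in SCHEMA.items():
        if record.get(name) is None:
            problems.append(f"{name}: missing")
            continue
        value = record[name]
        if not isinstance(value, expected_type):
            problems.append(f"{name}: expected {expected_type.__name__}, got {type(value).__name__}")
        elif not lo <= value <= hi:
            problems.append(f"{name}: {value} outside [{lo}, {hi}]")
    return problems


print(validate({"age": 34, "monthly_spend": 120.5}))  # []
print(validate({"age": 7, "monthly_spend": "120"}))
```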

Explainability monitoring tracks which features most influence model predictions, detecting shifts that may indicate the model is relying on spurious correlations or protected attributes. When feature importance patterns change significantly, alerts prompt investigation to determine whether the shift reflects legitimate changes in the underlying domain or problematic model behavior.

4. Implementation Models: Organizational Approaches to AI-Native Systems

Organizations adopt diverse approaches to implementing AI-native architectures, reflecting differences in scale, regulatory requirements, existing technology investments, and organizational structure. Understanding these implementation models enables leaders to select strategies aligned with their specific contexts.

4.1 Centralized AI Architecture

Centralized AI architectures consolidate data, compute, and model development within unified platforms operated by specialized teams. This approach offers several advantages that make it attractive for organizations in early stages of AI adoption or those operating in domains with strict regulatory requirements.

Advantages of Centralization

Resource efficiency proves the most immediate benefit. Centralized architectures enable hardware sharing across projects, maximizing utilization of expensive GPU infrastructure. Rather than provisioning dedicated clusters for each team, centralized platforms implement multi-tenancy with resource scheduling that allocates compute dynamically based on demand.

Standardization simplifies governance and reduces technical debt. Centralized teams establish consistent data formats, model development frameworks, deployment patterns, and monitoring approaches. This standardization accelerates onboarding for new team members and enables knowledge transfer between projects.

Quality control becomes more tractable when specialized teams can review all models before production deployment. Centralized review processes ensure that models meet organizational standards for accuracy, fairness, robustness, and compliance before serving customer-facing decisions.

Limitations of Centralization

Organizational bottlenecks emerge as AI adoption scales. Centralized teams become overwhelmed with requests from business units, creating queues that delay projects. The central team's limited domain expertise makes it difficult to effectively prioritize competing demands or validate that solutions address actual business needs.

Innovation constraints result from standardization. Business units with unique requirements must either compromise to fit central platform capabilities or abandon AI initiatives entirely. The central team's risk aversion may prevent experimentation with emerging techniques that could provide competitive advantages.

4.2 Federated AI Architecture

Federated AI architectures distribute capability across business units and functional teams while maintaining lightweight central coordination for shared infrastructure and governance standards. This approach scales more effectively as organizations mature their AI capabilities but introduces coordination challenges.

Federated Model Characteristics

Domain teams maintain autonomy over their AI initiatives, selecting technologies, developing models, and deploying solutions independently. Central platform teams provide reusable infrastructure components (data platforms, ML frameworks, deployment automation) that domain teams can adopt voluntarily rather than by mandate.

Centers of excellence establish best practices, provide training, and facilitate knowledge sharing across federated teams without imposing rigid standardization. These centers serve consultative roles, helping domain teams navigate technical decisions while respecting their autonomy.

Governance operates through frameworks rather than gatekeeping. Central risk and compliance teams define policies covering data usage, model validation, and deployment approvals, but domain teams implement these policies within their contexts rather than requiring central review of every decision.

Organizations typically evolve from centralized to federated architectures as AI capabilities mature. The transition requires deliberate investment in platform capabilities that enable domain team autonomy while maintaining sufficient governance to manage enterprise risk.

4.3 Hybrid Cloud Strategies

Hybrid cloud architectures distribute AI workloads across on-premises infrastructure and multiple public cloud providers, balancing control, flexibility, and cost considerations. This approach has become the dominant implementation pattern for large enterprises with established data centers and complex regulatory requirements.

Workload Placement Strategies

Data sovereignty requirements frequently dictate workload placement. Organizations operating in regulated industries or multiple jurisdictions often maintain on-premises infrastructure for sensitive data while leveraging public cloud for less-sensitive workloads. Healthcare providers may train clinical decision support models on-premises using patient data while deploying operational optimization models in public cloud.

Cost optimization drives hybrid strategies for training compute. Organizations leverage reserved on-premises capacity for baseline training workloads while bursting to public cloud for periodic large-scale training jobs. This approach avoids over-provisioning on-premises infrastructure for peak demand while maintaining cost-effective utilization of owned assets.
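A greedy sketch of the placement logic in the two paragraphs above, assuming a hypothetical `TrainingJob` record and a single pool of on-premises GPUs. Sensitive jobs are scheduled first and wait rather than burst, which is how the sovereignty rule takes precedence over cost; everything else fills owned capacity and overflows to public cloud.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TrainingJob:
    name: str
    gpus: int
    sensitive_data: bool  # e.g. patient data subject to residency rules


def place_jobs(jobs: List[TrainingJob], on_prem_gpus: int) -> List[Tuple[str, str]]:
    """Fill owned capacity first, burst the rest to cloud; sensitive jobs never leave on-prem."""
    placements = []
    free = on_prem_gpus
    # Sensitive jobs sort first (key False) so they get first claim on on-prem capacity.
    for job in sorted(jobs, key=lambda j: not j.sensitive_data):
        if job.gpus <= free:
            placements.append((job.name, "on_prem"))
            free -= job.gpus
        elif job.sensitive_data:
            placements.append((job.name, "queued_on_prem"))  # Wait rather than move data offsite.
        else:
            placements.append((job.name, "public_cloud"))
    return placements


jobs = [
    TrainingJob("demand_forecast", 16, False),
    TrainingJob("sepsis_model", 8, True),
    TrainingJob("llm_finetune", 64, False),
]
print(place_jobs(jobs, on_prem_gpus=24))
```

Real schedulers add bin-packing, preemption, and per-region residency rules; the ordering choice is the part of this sketch that carries the policy.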

Geographic distribution places inference infrastructure near users to minimize latency. Global enterprises deploy models to edge locations and regional cloud availability zones, routing prediction requests to nearby infrastructure. Hybrid orchestration ensures that models remain synchronized across locations while adapting to regional capacity constraints.

Technical Integration Patterns

Container orchestration platforms including Kubernetes provide consistent application deployment abstractions across hybrid environments. Organizations define deployment specifications once and execute them across on-premises clusters and managed cloud services, abstracting infrastructure differences.

Data replication strategies maintain consistency between environments while respecting data residency requirements. Change data capture streams operational database updates to cloud analytics platforms. Object storage replication synchronizes training datasets between on-premises feature stores and cloud training infrastructure.

4.4 Multi-Tenant Scalability Patterns

Multi-tenant architectures enable organizations to efficiently serve multiple business units, customer segments, or external clients from shared infrastructure while maintaining isolation, security, and performance guarantees. These patterns prove essential for platform businesses and enterprises consolidating previously siloed systems.

Isolation Strategies

Namespace isolation provides the simplest multi-tenancy model, leveraging Kubernetes namespaces or similar constructs to partition compute resources, implement access controls, and track resource consumption by tenant. This approach offers lightweight isolation suitable for trusted internal teams but provides limited protection against noisy neighbor problems or sophisticated attacks.

Virtual machine isolation provides stronger boundaries, dedicating VM instances to individual tenants. This approach prevents resource interference and provides clear security boundaries but increases infrastructure overhead and complicates resource sharing.

Data plane isolation ensures that tenant data never commingles in shared storage systems. Dedicated databases, per-tenant object storage prefixes, or per-tenant encryption keys prevent accidental exposure while the underlying storage infrastructure remains shared. Compression and deduplication still reduce costs, but only within each tenant's boundary, since data encrypted under different keys cannot be deduplicated across tenants.

Resource Allocation Models

Fair share scheduling allocates compute resources proportional to tenant entitlements, ensuring that no tenant monopolizes shared infrastructure. Schedulers implement priority queues, preemption policies, and quota enforcement that balance competing demands while maintaining service level objectives.

Burst capacity management allows tenants to temporarily exceed base allocations during peak demand, subject to available capacity. This approach maximizes infrastructure utilization by enabling tenants to opportunistically use idle resources while maintaining guaranteed minimums.
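Guaranteed minimums plus opportunistic bursting correspond to weighted max-min fair sharing. The sketch below assumes GPU counts as the resource, guarantees that sum to no more than total capacity, and hypothetical tenant names. Each tenant first receives the smaller of its demand and its guarantee; idle capacity is then divided by weight, round by round, among tenants that still want more.

```python
from typing import Dict


def allocate(capacity: float, demand: Dict[str, float],
             guaranteed: Dict[str, float], weight: Dict[str, float]) -> Dict[str, float]:
    """Honour guarantees up to demand, then share idle capacity by weight (water-filling)."""
    alloc = {t: min(demand[t], guaranteed[t]) for t in demand}
    remaining = capacity - sum(alloc.values())
    hungry = {t for t in demand if demand[t] > alloc[t]}
    while remaining > 1e-9 and hungry:
        total_w = sum(weight[t] for t in hungry)
        satisfied, spent = set(), 0.0
        for t in hungry:
            # Each hungry tenant is offered its weighted share of what is left this round.
            give = min(remaining * weight[t] / total_w, demand[t] - alloc[t])
            alloc[t] += give
            spent += give
            if demand[t] - alloc[t] <= 1e-9:
                satisfied.add(t)
        remaining -= spent
        hungry -= satisfied
        if not satisfied:
            break  # Every hungry tenant took its full share, so capacity is exhausted.
    return alloc


guarantee = {"A": 30, "B": 30, "C": 30}
weights = {"A": 1, "B": 1, "C": 1}
print(allocate(100, {"A": 60, "B": 10, "C": 50}, guarantee, weights))
# {'A': 45.0, 'B': 10, 'C': 45.0}
```

Tenant B's unused guarantee is lent to A and C. Reclaiming that capacity when B's demand rises, typically by preempting burst work, is not modelled here.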

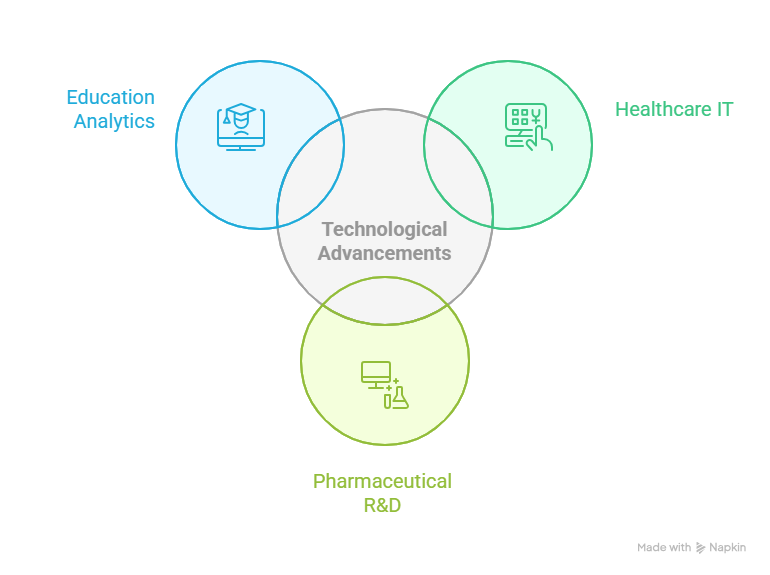

5. Cross-Domain Applications: AI-Native Systems in Practice

The theoretical foundations of AI-native architecture find expression in production systems that demonstrate both the possibilities and challenges of intelligence-first design. Examining implementations across healthcare, pharmaceutical research, and education reveals common patterns and domain-specific adaptations.

5.1 Healthcare IT: Clinical Intelligence Platforms

Healthcare organizations have implemented AI-native architectures for clinical decision support, operational optimization, and population health management. These systems exemplify the requirements and complexities of AI in high-stakes, highly regulated environments.

Providence Health System has built one of the most sophisticated clinical AI platforms in the healthcare industry, processing data from 51 hospitals and 1,000 clinics to support over 120,000 caregivers. The platform architecture unifies electronic health records, medical imaging, genomics data, remote monitoring streams, and claims information within a data fabric that enforces HIPAA compliance while enabling real-time clinical decision support.

The system deploys over 200 machine learning models covering predictive applications including sepsis detection, hospital readmission risk, surgical complication forecasting, and medication adherence prediction. Models continuously update as new patient outcomes become available, with automated retraining pipelines that maintain model performance as disease patterns and treatment protocols evolve.

Physicians using the platform's AI-enhanced documentation tools save an average of 5.33 minutes per patient visit, with 80% reporting reduced cognitive burden. This efficiency gain translates to hundreds of thousands of additional patient interactions annually across the Providence system, demonstrating how AI-native architectures create compounding value beyond individual model performance.

5.2 Pharmaceutical R&D: Discovery and Development Acceleration

Pharmaceutical companies have invested heavily in AI-native platforms for drug discovery and clinical development, seeking to reduce the traditional 10-15 year timeline and $2.6 billion average cost of bringing new therapeutics to market. These platforms integrate diverse data types—molecular structures, protein interactions, clinical trial outcomes, real-world evidence—within unified architectures that support hypothesis generation, validation, and optimization.

AI-Accelerated Drug Discovery Architectures

Modern discovery platforms implement multi-modal learning architectures that simultaneously process chemical structures, biological assay results, scientific literature, and clinical outcomes. Graph neural networks learn representations of molecular structures that capture pharmacological properties. Natural language processing models extract compound-disease relationships from millions of research publications. Generative models propose novel chemical structures optimized for desired pharmacological profiles while avoiding toxic properties.

The compound optimization workflow exemplifies AI-native design principles. Scientists specify target properties—binding affinity, bioavailability, metabolic stability, toxicity thresholds—and generative models propose candidate structures. Computational chemistry models predict properties without requiring physical synthesis. Promising candidates enter robotic synthesis and high-throughput screening, with results fed back to refine model predictions. This closed-loop system compresses discovery timelines from years to months for lead compound identification.

AI implementations have reduced drug discovery timelines by over 50% at leading pharmaceutical companies, enabling faster response to emerging diseases and more efficient use of research budgets. The COVID-19 pandemic demonstrated this capability, with AI-native platforms identifying potential therapeutic candidates within weeks of viral genome publication.

5.3 Education Analytics: Adaptive Learning Ecosystems

Educational institutions and ed-tech companies have deployed AI-native platforms that personalize learning experiences, optimize student support interventions, and provide instructors with actionable insights into learning patterns. These systems demonstrate how AI-native architectures enable mass personalization at scales impossible through manual approaches.

The University of South Florida has positioned itself among the first universities to form a college dedicated to AI, cybersecurity, and computing, implementing AI-native systems for both educational delivery and institutional operations. The platform tracks student interactions across learning management systems, assessment platforms, collaboration tools, and physical attendance systems, creating comprehensive behavioral profiles that inform intervention strategies.

Predictive models identify students at risk of academic difficulty based on engagement patterns, assessment performance, help-seeking behavior, and peer collaboration metrics. Rather than waiting for midterm grades to reveal struggles, the system flags concerning patterns within weeks of semester start, enabling early intervention when corrective actions prove most effective.

The platform implements adaptive assessment that adjusts question difficulty based on demonstrated knowledge, efficiently measuring student understanding while reducing test-taking time. Item response theory models estimate student ability and question difficulty simultaneously, enabling precise measurement with fewer questions than traditional fixed-form assessments.
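The core of an adaptive test can be sketched with the Rasch (one-parameter logistic) model, the simplest IRT model, where the probability of a correct answer is 1 / (1 + exp(-(θ - b))) for ability θ and item difficulty b. The sketch assumes item difficulties were calibrated beforehand (the joint estimation step), estimates ability on a grid with a standard normal prior, and picks the unused item with the highest Fisher information, which under this model is the item whose difficulty is closest to the current estimate. The item bank is hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

GRID = [i / 10 for i in range(-40, 41)]  # Candidate ability values from -4.0 to 4.0


def p_correct(theta: float, difficulty: float) -> float:
    """Rasch (1PL) model: probability that a student of ability theta answers correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def estimate_ability(responses: List[Tuple[float, bool]]) -> float:
    """Expected a posteriori (EAP) ability estimate with a standard normal prior."""
    weights = []
    for theta in GRID:
        w = math.exp(-theta * theta / 2)  # Unnormalised N(0, 1) prior.
        for difficulty, correct in responses:
            p = p_correct(theta, difficulty)
            w *= p if correct else 1.0 - p
        weights.append(w)
    return sum(t * w for t, w in zip(GRID, weights)) / sum(weights)


def next_item(theta: float, bank: Dict[str, float], used: Set[str]) -> str:
    """Pick the unused item with maximum information p(1 - p) at the current estimate."""
    def info(item: str) -> float:
        p = p_correct(theta, bank[item])
        return p * (1.0 - p)
    return max((item for item in bank if item not in used), key=info)


bank = {"q1": -1.5, "q2": -0.5, "q3": 0.0, "q4": 0.7, "q5": 1.8}  # item -> difficulty
theta0 = estimate_ability([])
first = next_item(theta0, bank, set())
theta1 = estimate_ability([(bank[first], True)])  # The student answers the first item correctly.
second = next_item(theta1, bank, {first})
print(first, second)  # q3 q4
```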

Automated feedback systems provide students with detailed explanations of errors, suggested resources for remediation, and practice problems targeting identified knowledge gaps. These systems scale personalized support beyond what instructors can provide individually while freeing faculty time for complex interactions that require human judgment.

6. Strategic Benefits: The AI-Native Advantage

Organizations that successfully implement AI-native architectures realize multiple categories of strategic benefit that compound over time to create sustainable competitive advantages.

6.1 Organizational Agility and Time-to-Market

AI-native architectures dramatically reduce the time required to develop, validate, and deploy new AI capabilities. Organizations with mature implementations report that data scientists can move from initial hypothesis to production deployment in days or weeks rather than the months or quarters required with conventional architectures.

This acceleration stems from several architectural characteristics. Unified data platforms eliminate the weeks typically spent negotiating data access, understanding schemas, and building extraction pipelines. Feature stores provide libraries of pre-computed transformations that data scientists can reuse rather than rebuild for each project. Automated ML pipelines handle the operational complexity of training at scale, validating models, and deploying to production. Containerized deployment abstracts infrastructure complexity, enabling data scientists to define deployment requirements declaratively rather than coordinating with infrastructure teams.
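The reuse that feature stores enable can be illustrated with a toy in-memory registry. A transformation is defined once under a name, and any team that requests that feature for the same entity receives the value that has already been computed. The class, the feature name, and the record layout below are hypothetical. Production feature stores also provide offline and online storage, point-in-time correctness, and scheduled refresh, none of which is modeled here.

```python
from typing import Callable, Dict, Tuple

Transform = Callable[[dict], float]


class FeatureRegistry:
    """Toy feature store: named transformations are registered once and their values reused."""

    def __init__(self) -> None:
        self._definitions: Dict[str, Transform] = {}
        self._values: Dict[Tuple[str, str], float] = {}  # (feature, entity) -> materialized value
        self.compute_count = 0

    def register(self, name: str) -> Callable[[Transform], Transform]:
        """Decorator that publishes a transformation under a unique feature name."""
        def decorator(fn: Transform) -> Transform:
            if name in self._definitions:
                raise ValueError(f"feature {name!r} is already registered")
            self._definitions[name] = fn
            return fn
        return decorator

    def get(self, name: str, entity_id: str, raw_record: dict) -> float:
        """Return the feature for an entity, computing it only on the first request."""
        key = (name, entity_id)
        if key not in self._values:
            self._values[key] = self._definitions[name](raw_record)
            self.compute_count += 1
        return self._values[key]


store = FeatureRegistry()


@store.register("order_count_30d")
def order_count_30d(record: dict) -> float:
    return float(len(record["orders_30d"]))


customer = {"orders_30d": [120.0, 80.5, 42.0]}  # hypothetical raw record
fraud_input = store.get("order_count_30d", "cust-42", customer)  # computed on first request
churn_input = store.get("order_count_30d", "cust-42", customer)  # reused by a second team
```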

The cumulative effect transforms AI from a lengthy special project into a routine capability that organizations can apply rapidly to emerging opportunities. When competitive threats appear or market conditions shift, AI-native organizations can develop and deploy responsive systems in timeframes that AI-enabled competitors cannot match.

6.2 System Resilience and Fault Tolerance

AI-native architectures implement resilience patterns at every layer, ensuring that system intelligence degrades gracefully under adverse conditions rather than failing catastrophically. These patterns become essential as organizations increase their dependence on AI for operational decisions.

Model serving infrastructure implements circuit breakers that detect elevated error rates or latency and automatically route traffic to backup models or fallback logic. This capability prevents cascading failures when models encounter unexpected inputs or downstream dependencies become unavailable.
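A circuit breaker of this kind can be sketched as a small state machine. This is a minimal, single-threaded illustration. The thresholds, the `primary_model` and `fallback_rule` functions, and the injectable clock are hypothetical, and a production breaker would also need locking and per-endpoint metrics.

```python
import time
from typing import Any, Callable


class CircuitBreaker:
    """Send traffic to a fallback after repeated primary-model failures.

    CLOSED: the primary model serves. OPEN: the fallback serves without calling the primary.
    HALF_OPEN: after a cool-down, one trial request goes to the primary to test recovery.
    """

    def __init__(self, failure_threshold: int = 3, latency_budget_s: float = 0.2,
                 reset_after_s: float = 30.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.latency_budget_s = latency_budget_s
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.state = "CLOSED"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, primary: Callable[[Any], Any], fallback: Callable[[Any], Any], x: Any) -> Any:
        if self.state == "OPEN":
            if self.clock() - self.opened_at < self.reset_after_s:
                return fallback(x)
            self.state = "HALF_OPEN"
        start = self.clock()
        try:
            result = primary(x)
        except Exception:  # any model or dependency error counts as a failure
            self._record_failure()
            return fallback(x)
        if self.clock() - start > self.latency_budget_s:
            self._record_failure()  # too slow: count it, but the answer is still usable
        else:
            self.failures = 0
            self.state = "CLOSED"
        return result

    def _record_failure(self) -> None:
        self.failures += 1
        if self.state == "HALF_OPEN" or self.failures >= self.failure_threshold:
            self.state = "OPEN"
            self.opened_at = self.clock()


# Simulated outage with a manually advanced clock.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_after_s=10.0, clock=lambda: now[0])


def primary_model(features: dict) -> float:
    raise TimeoutError("downstream feature service unavailable")


def fallback_rule(features: dict) -> float:
    return 0.5  # hypothetical conservative default score


scores = [breaker.call(primary_model, fallback_rule, {"amount": 10.0}) for _ in range(3)]
```

Counting slow responses as failures lets elevated latency trip the breaker as well as outright errors, which matches the two triggers named in the text.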

Feature stores maintain redundant storage and caching layers that enable inference to continue even when primary data sources experience outages. Stale feature values may reduce prediction accuracy, but for most applications continued operation with degraded accuracy is preferable to complete service unavailability.
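This degraded-but-available behavior can be sketched as a read path that prefers the primary source and falls back to the last successfully fetched values. The staleness bound is a design choice added here, not taken from the text: it prevents arbitrarily old features from being served silently. The `is_stale` flag lets callers log the event or widen their uncertainty. All names and the 600-second bound are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple

Features = Dict[str, float]


class FallbackFeatureCache:
    """Read features from the primary source, falling back to the last known values during outages."""

    def __init__(self, fetch: Callable[[str], Features], max_staleness_s: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch = fetch
        self.max_staleness_s = max_staleness_s
        self.clock = clock
        self._last_good: Dict[str, Tuple[Features, float]] = {}  # entity -> (features, fetch time)

    def get(self, entity_id: str) -> Tuple[Features, bool]:
        """Return (features, is_stale); re-raise the outage if no recent enough copy exists."""
        try:
            features = self.fetch(entity_id)
        except Exception:
            cached = self._last_good.get(entity_id)
            if cached is None or self.clock() - cached[1] > self.max_staleness_s:
                raise
            return cached[0], True
        self._last_good[entity_id] = (features, self.clock())
        return features, False


now = [0.0]
source_up = [True]


def fetch_from_primary(entity_id: str) -> Features:
    if not source_up[0]:
        raise ConnectionError("primary feature source unavailable")
    return {"avg_basket_value": 42.0}  # hypothetical feature


cache = FallbackFeatureCache(fetch_from_primary, max_staleness_s=600.0, clock=lambda: now[0])
fresh = cache.get("cust-7")
source_up[0], now[0] = False, 120.0
degraded = cache.get("cust-7")  # outage: last known values, flagged as stale
```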

Monitoring systems detect model performance degradation before business metrics suffer, triggering automated rollback to previously validated models. Rather than requiring human operators to notice problems and execute recovery procedures, self-healing architectures restore service automatically while alerting teams to investigate root causes.
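The detect-and-roll-back loop can be sketched as a rolling-window monitor over labeled outcomes. The sketch assumes that ground-truth labels eventually arrive for served predictions and that each registered version carries the accuracy it achieved during validation. The version names, window size, and 0.05 tolerance are hypothetical, and the alert callback stands in for notifying the owning team.

```python
from collections import deque
from typing import Callable, List, Tuple


class RollbackMonitor:
    """Roll back to the previously validated model when live accuracy falls below its validation baseline."""

    def __init__(self, versions: List[Tuple[str, float]], tolerance: float = 0.05,
                 window: int = 500, alert: Callable[[str], None] = print) -> None:
        if not versions:
            raise ValueError("need at least one validated version")
        self.versions = list(versions)  # oldest first; the last entry is the active model
        self.tolerance = tolerance
        self.outcomes: deque = deque(maxlen=window)
        self.alert = alert

    @property
    def active(self) -> str:
        return self.versions[-1][0]

    def record(self, correct: bool) -> str:
        """Log whether a served prediction turned out correct; return the model that should serve next."""
        self.outcomes.append(bool(correct))
        if len(self.outcomes) == self.outcomes.maxlen and len(self.versions) > 1:
            live_accuracy = sum(self.outcomes) / len(self.outcomes)
            name, validated = self.versions[-1]
            if live_accuracy < validated - self.tolerance:
                self.versions.pop()
                self.outcomes.clear()  # judge the restored model on fresh traffic only
                self.alert(f"rolled back {name} (live {live_accuracy:.2f} vs validated "
                           f"{validated:.2f}); now serving {self.active}")
        return self.active


alerts: List[str] = []
monitor = RollbackMonitor([("fraud-v3", 0.92), ("fraud-v4", 0.94)], window=10, alert=alerts.append)
for correct in [True] * 7 + [False] * 3:  # live accuracy 0.70 against a 0.94 validation score
    serving = monitor.record(correct)
```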

6.3 Compliance Readiness and Governance

AI-native architectures embed governance and compliance capabilities throughout the system rather than treating them as afterthoughts. This integrated approach proves essential as regulatory scrutiny of AI systems intensifies across industries and jurisdictions.

Data lineage tracking provides comprehensive documentation of how training data flows from source systems through transformations to become model inputs. This capability enables organizations to demonstrate compliance with data usage restrictions, respond to data subject access requests, and validate that models were trained on authorized data.
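At its core, lineage tracking is a directed graph that runs from source systems through transformations to models, so the compliance questions above become graph traversals. The sketch assumes lineage has already been captured as an adjacency map; in practice it is usually emitted by pipeline tooling. Every artifact and source name is hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set

# Hypothetical lineage graph: each artifact maps to the inputs it was derived from.
LINEAGE: Dict[str, List[str]] = {
    "churn_model_v2": ["training_set_2024q4"],
    "training_set_2024q4": ["customer_features", "support_tickets_clean"],
    "customer_features": ["crm.accounts", "billing.invoices"],
    "support_tickets_clean": ["support.tickets"],
}


def upstream_sources(artifact: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Raw source systems (nodes with no recorded inputs) that ultimately feed an artifact."""
    sources: Set[str] = set()
    seen, queue = {artifact}, deque([artifact])
    while queue:
        node = queue.popleft()
        parents = lineage.get(node, [])
        if not parents and node != artifact:
            sources.add(node)
        for parent in parents:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return sources


def downstream_artifacts(source: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Everything derived, directly or indirectly, from a source (e.g. to answer a data subject request)."""
    children: Dict[str, List[str]] = defaultdict(list)
    for node, parents in lineage.items():
        for parent in parents:
            children[parent].append(node)
    found: Set[str] = set()
    queue = deque([source])
    while queue:
        for child in children.get(queue.popleft(), []):
            if child not in found:
                found.add(child)
                queue.append(child)
    return found


def unauthorized_sources(artifact: str, lineage: Dict[str, List[str]],
                         authorized: Iterable[str]) -> Set[str]:
    """Sources that feed the artifact but are not on the approved list."""
    return upstream_sources(artifact, lineage) - set(authorized)
```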

Model cards document intended use cases, training data characteristics, performance across demographic subgroups, known limitations, and approval status. These standardized documentation artifacts satisfy regulatory requirements while helping downstream teams understand appropriate model applications.
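A model card can be kept as a structured, machine-readable record, so pipelines can check it in addition to humans reading it. The fields below mirror those listed in the text. The class, the subgroup names, and the example values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    model_name: str
    version: str
    intended_use: str
    training_data: str
    subgroup_accuracy: Dict[str, float] = field(default_factory=dict)
    known_limitations: List[str] = field(default_factory=list)
    approval_status: str = "pending"  # e.g. "pending", "approved", "rejected"

    def max_subgroup_gap(self) -> float:
        """Largest accuracy difference between any two documented subgroups."""
        if not self.subgroup_accuracy:
            return 0.0
        return max(self.subgroup_accuracy.values()) - min(self.subgroup_accuracy.values())

    def to_json(self) -> str:
        """Serialize for storage alongside the model artifact in a registry."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


card = ModelCard(
    model_name="readmission-risk",
    version="1.3.0",
    intended_use="Prioritize adult inpatients for post-discharge follow-up; not for triage decisions.",
    training_data="De-identified discharge records, 2019-2023 (hypothetical)",
    subgroup_accuracy={"age_18_44": 0.81, "age_45_64": 0.79, "age_65_plus": 0.74},
    known_limitations=["Not validated on pediatric patients"],
)
```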

Explainability infrastructure generates human-interpretable explanations for individual predictions, enabling organizations to satisfy "right to explanation" regulations while helping users trust and effectively use AI recommendations. Rather than implementing explanation as a separate capability, AI-native architectures make it a standard component of model serving.
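For a linear (logistic) scoring model, a per-prediction explanation can be computed exactly by comparing each feature with a reference point. This sketch assumes such a model and uses the average applicant as the baseline. For linear models with independent features, these log-odds attributions correspond to SHAP values, while non-linear models need approximation methods. The model, weights, and feature names are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def explain_linear(weights: Dict[str, float], bias: float, x: Dict[str, float],
                   baseline: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Score one example with a logistic model and attribute its logit to each feature.

    Each contribution is weight * (value - baseline value), so the baseline logit plus
    all contributions equals the example's logit exactly.
    """
    baseline_logit = bias + sum(w * baseline[f] for f, w in weights.items())
    contributions = {f: w * (x[f] - baseline[f]) for f, w in weights.items()}
    logit = baseline_logit + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


# Hypothetical credit-risk model; the baseline is the average applicant.
weights = {"debt_to_income": 3.0, "years_employed": -0.2, "late_payments": 0.8}
baseline = {"debt_to_income": 0.3, "years_employed": 5.0, "late_payments": 0.5}
applicant = {"debt_to_income": 0.5, "years_employed": 1.0, "late_payments": 2.0}
probability, reasons = explain_linear(weights, bias=-2.0, x=applicant, baseline=baseline)
for feature, contribution in reasons:
    print(f"{feature}: {contribution:+.2f} to the log-odds")
```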

Automated validation workflows enforce that models meet organizational standards before production deployment. Statistical tests verify that models don't exhibit prohibited disparate impact across protected groups. Adversarial robustness evaluations confirm that models resist manipulation. Performance benchmarks ensure that new models meet accuracy thresholds. These validations occur automatically as part of deployment pipelines, preventing non-compliant models from reaching production.
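One widely used screen for disparate impact is the four-fifths rule from US employment-selection guidance. Under this rule, a group whose selection rate falls below 80% of the highest group's rate is treated as evidence of adverse impact. The sketch pairs that ratio with a two-proportion z-test, so the deployment gate does not fail on gaps that small samples could produce by chance. The thresholds, group labels, and counts are hypothetical, and a real gate would use the organization's own criteria.

```python
import math
from typing import Dict, Tuple

Outcomes = Dict[str, Tuple[int, int]]  # group -> (number selected, group size)


def selection_rates(outcomes: Outcomes) -> Dict[str, float]:
    return {group: selected / size for group, (selected, size) in outcomes.items()}


def two_proportion_p_value(a: Tuple[int, int], b: Tuple[int, int]) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    (x1, n1), (x2, n2) = a, b
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 1.0  # both groups all-selected or none-selected: no difference to test
    z = (x1 / n1 - x2 / n2) / se
    return math.erfc(abs(z) / math.sqrt(2))


def passes_disparate_impact_gate(outcomes: Outcomes, ratio_threshold: float = 0.8,
                                 alpha: float = 0.05) -> bool:
    """Fail only if the lowest/highest selection-rate ratio is below the threshold AND the gap is significant."""
    rates = selection_rates(outcomes)
    low = min(rates, key=rates.get)
    high = max(rates, key=rates.get)
    if rates[high] == 0:
        return True  # nobody selected in any group
    ratio = rates[low] / rates[high]
    p_value = two_proportion_p_value(outcomes[low], outcomes[high])
    return not (ratio < ratio_threshold and p_value < alpha)


# Hypothetical approval counts from a validation set.
validation_outcomes = {"group_a": (180, 400), "group_b": (110, 400)}
```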

6.4 Total Cost of Ownership Optimization

While AI-native architectures require substantial initial investment, they ultimately deliver lower total cost of ownership than AI-enabled approaches that layer intelligence onto legacy systems. These savings accrue from multiple sources.

Infrastructure efficiency improves through workload consolidation and resource sharing. Rather than provisioning dedicated infrastructure for each AI project, organizations maximize utilization of expensive GPUs and specialized inference accelerators across multiple teams and applications.

Operational leverage increases as automation eliminates manual workflows. Traditional ML deployments require data engineers to build custom extraction pipelines, data scientists to manually track experiments, ML engineers to containerize models, and operations teams to configure monitoring. AI-native platforms automate these workflows, enabling smaller teams to support larger AI portfolios.

Technical debt reduction occurs through standardization and abstraction. Organizations avoid accumulating incompatible technologies, custom integration code, and undocumented deployment procedures that require ongoing maintenance. Platform evolution occurs centrally, allowing all applications to benefit from improvements without individual migration projects.

Faster time-to-value reduces opportunity costs and accelerates return on AI investments. Organizations that can deploy AI capabilities in weeks rather than quarters realize revenue benefits sooner and can iterate more frequently to optimize business impact.

7. Vision 2030: The AI-Native Enterprise Ecosystem

The trajectory of AI-native architecture through 2030 points toward increasingly autonomous, adaptive, and interconnected enterprise systems that fundamentally reshape organizational operations and competitive dynamics.

Autonomous Operations at Scale

By 2030, AI-native enterprises will operate increasingly autonomous systems that monitor their own performance, identify optimization opportunities, and execute improvements with minimal human intervention. These systems will extend beyond today's narrow automation to exhibit broad operational autonomy across supply chains, customer service, financial operations, and product development.

Autonomous supply chains will optimize sourcing, production, inventory, and distribution in response to real-time signals including demand patterns, supply disruptions, capacity constraints, and cost fluctuations. Rather than requiring human operators to monitor dashboards and adjust parameters, these systems will continuously learn optimal policies and execute decisions at machine speed.

Self-optimizing cloud infrastructure will automatically provision, scale, and decommission resources based on application requirements and cost objectives. Rather than implementing static autoscaling rules, intelligent infrastructure will forecast demand patterns, pre-provision capacity to meet anticipated load, and experiment with configuration alternatives to minimize cost while maintaining performance service levels.
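The contrast between static autoscaling rules and forecast-driven provisioning can be sketched with a simple trend forecast that feeds a capacity calculation. Holt's linear exponential smoothing serves here only as a stand-in forecaster. The smoothing constants, per-replica capacity, 20% headroom, and two-replica floor are all hypothetical parameters.

```python
import math
from typing import Sequence


def holt_forecast(series: Sequence[float], alpha: float = 0.5, beta: float = 0.3,
                  horizon: int = 1) -> float:
    """Holt's linear-trend exponential smoothing: forecast `horizon` steps past the last observation."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        previous_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return level + horizon * trend


def replicas_needed(forecast_rps: float, rps_per_replica: float, headroom: float = 0.2,
                    min_replicas: int = 2) -> int:
    """Capacity to pre-provision: forecast load plus headroom, never below a floor."""
    return max(min_replicas, math.ceil(forecast_rps * (1 + headroom) / rps_per_replica))


# Hypothetical requests-per-second samples for the last five intervals.
recent_load = [100.0, 110.0, 120.0, 130.0, 140.0]
forecast = holt_forecast(recent_load)
target = replicas_needed(forecast, rps_per_replica=50.0)
```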

Federated Learning and Privacy-Preserving AI

Privacy regulations and data sovereignty requirements will drive adoption of federated learning architectures where models train across distributed datasets without centralizing sensitive information. Healthcare systems will collaboratively develop diagnostic models that learn from millions of patients without sharing individual health records. Financial institutions will improve fraud detection through shared intelligence while protecting customer transaction details.
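Federated averaging (FedAvg) is a widely used baseline for this pattern. Participants run a few training steps on data that stays on their own systems, and a coordinator averages only the resulting model weights, weighted by the number of examples each participant holds. This sketch uses a one-feature linear model and synthetic data so it runs with the standard library alone; the participants and data are hypothetical. On its own, FedAvg still exposes each participant's weight update to the coordinator, which is what the cryptographic techniques in the next paragraph are meant to address.

```python
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave their owner
Weights = Tuple[float, float]        # (slope, intercept) of a linear model


def local_update(weights: Weights, data: Dataset, lr: float = 0.05, epochs: int = 20) -> Weights:
    """Full-batch gradient descent on mean squared error, run entirely on one participant's data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        residuals = [(w * x + b - y, x) for x, y in data]
        grad_w = 2.0 * sum(r * x for r, x in residuals) / n
        grad_b = 2.0 * sum(r for r, _ in residuals) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(weights: Weights, participants: List[Dataset], rounds: int = 50) -> Weights:
    """FedAvg: each round, participants train locally and only their weights are averaged by sample count."""
    for _ in range(rounds):
        updates = [(local_update(weights, data), len(data)) for data in participants]
        total = sum(n for _, n in updates)
        weights = (sum(w * n for (w, _), n in updates) / total,
                   sum(b * n for (_, b), n in updates) / total)
    return weights


# Three hypothetical institutions whose private data follow y = 2x + 1 plus noise.
rng = random.Random(0)
participants = []
for size in (50, 120, 80):
    xs = [rng.random() for _ in range(size)]
    participants.append([(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs])
global_model = federated_averaging((0.0, 0.0), participants)
```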

These architectures will implement cryptographic techniques including homomorphic encryption and secure multi-party computation that enable model training on encrypted or secret-shared data. Organizations will contribute to collective intelligence while maintaining cryptographic guarantees that their proprietary data remains confidential.
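Additive secret sharing is one of the basic building blocks of secure multi-party computation. It shows how parties can compute a joint result, such as the sum of their model updates, without any party seeing another's input. This is a minimal sketch that assumes honest-but-curious participants, private channels between them, and no dropouts; real secure-aggregation protocols handle those cases. Homomorphic encryption, the other technique named above, is not shown. The modulus and fixed-point scale are illustrative choices.

```python
import secrets
from typing import List

PRIME = 2**61 - 1  # Mersenne prime used as the field modulus
SCALE = 10**6      # fixed-point scale: six decimal places of precision


def make_shares(value: float, n_parties: int) -> List[int]:
    """Split a value into n random shares that sum to it modulo PRIME; any n - 1 shares reveal nothing."""
    encoded = round(value * SCALE) % PRIME
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((encoded - sum(shares)) % PRIME)
    return shares


def secure_sum(private_values: List[float]) -> float:
    """Each party shares its value with all others; only the total is ever reconstructed."""
    n = len(private_values)
    dealt = [make_shares(v, n) for v in private_values]  # dealt[i][j]: share party i sends to party j
    partial_sums = [sum(dealt[i][j] for i in range(n)) % PRIME for j in range(n)]  # computed by party j
    total = sum(partial_sums) % PRIME
    if total > PRIME // 2:  # decode the field element back to a signed number
        total -= PRIME
    return total / SCALE


# Hypothetical model-update values from three institutions (e.g. one weight each).
aggregate = secure_sum([0.25, -1.5, 3.125])
```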

AI-Native Ecosystems and Marketplaces

Enterprise AI will evolve from isolated organizational capabilities to interconnected ecosystems where organizations share models, datasets, and specialized infrastructure through marketplaces and industry consortia. Rather than each organization independently developing similar capabilities, shared AI infrastructure will enable specialization and knowledge transfer.

Industry-specific foundation models will serve as starting points for fine-tuning, dramatically reducing the data and compute required to develop specialized capabilities. Healthcare providers will fine-tune clinical models pre-trained on millions of de-identified patient records. Financial services firms will adapt risk models trained on decades of market data.

These ecosystems will implement reputation systems, quality certifications, and liability frameworks that enable safe adoption of third-party AI capabilities. Organizations will confidently integrate external models into production systems, knowing that providers maintain ongoing performance monitoring and accept responsibility for failures.

Integration with The AI Operating System

AI-native architecture serves as the technical foundation that enables The AI Operating System vision of intelligence-infused enterprise operations. Where The AI Operating System describes the conceptual framework for how organizations will operate in the age of artificial intelligence, AI-native architecture provides the concrete implementation blueprint.

The convergence of these concepts will manifest in enterprise platforms that seamlessly integrate human expertise with machine intelligence. Knowledge workers will interact with AI systems that understand organizational context, learn from collective experience, and augment human decision-making across every operational domain. The distinction between "using AI" and "performing work" will dissolve as intelligence becomes embedded in every workflow, interface, and process.

By 2030, the competitive landscape will divide sharply between organizations that successfully implemented AI-native architectures and those that continued layering AI capabilities onto legacy foundations. The former will operate with agility, efficiency, and intelligence that the latter cannot match, regardless of their AI budgets or talent density. The architectural decisions organizations make today will determine which side of this divide they occupy.

8. Conclusion: Building the Intelligent Enterprise Foundation

AI-native enterprise architecture represents more than a technology upgrade—it constitutes a fundamental reimagining of how organizations structure their technical foundations to compete in an intelligence-driven economy. The transition from AI-enabled to AI-native systems parallels historical shifts from mainframes to client-server architectures and from on-premises to cloud infrastructure, but with implications that extend more deeply into operational DNA.

The evidence points in one direction: top performers, whose results correlate strongly with architectural maturity, realize returns of 10.3x on generative AI investments, nearly three times the 3.7x average. They deploy capabilities in weeks that competitors require quarters to deliver. They scale intelligence across operations without proportional increases in cost or complexity. They adapt to market disruptions with a speed that creates durable competitive advantages.

The architectural components examined throughout this analysis—data fabrics, ML pipelines, model registries, observability frameworks—represent proven patterns that organizations can implement today. The implementation models—centralized, federated, hybrid—provide strategic options that accommodate diverse organizational contexts and maturity levels. The cross-domain applications in healthcare, pharmaceuticals, and education demonstrate that AI-native architectures deliver measurable value across industries and use cases.

The path forward requires deliberate commitment from organizational leadership, sustained investment in technical capabilities, and cultural transformation that embraces continuous learning and adaptation. Organizations that treat AI-native architecture as a multi-year strategic initiative rather than a tactical technology project position themselves to lead their industries through 2030 and beyond.

The infrastructure investments that the largest technology companies are making (Alphabet, Amazon, Meta, and Microsoft collectively planned approximately $315 billion in capital spending for 2025) signal their conviction that AI-native architectures will define competitive positioning for decades. Enterprises across industries face the same imperative: build the intelligent infrastructure foundation today or cede competitive ground to organizations that do.

For organizations ready to undertake this transformation, the synthesis of AI-native architecture principles with The AI Operating System framework provides a comprehensive roadmap. Together, these concepts define both the technical implementation and the operational model for intelligent enterprises that will dominate their markets in the coming decade.